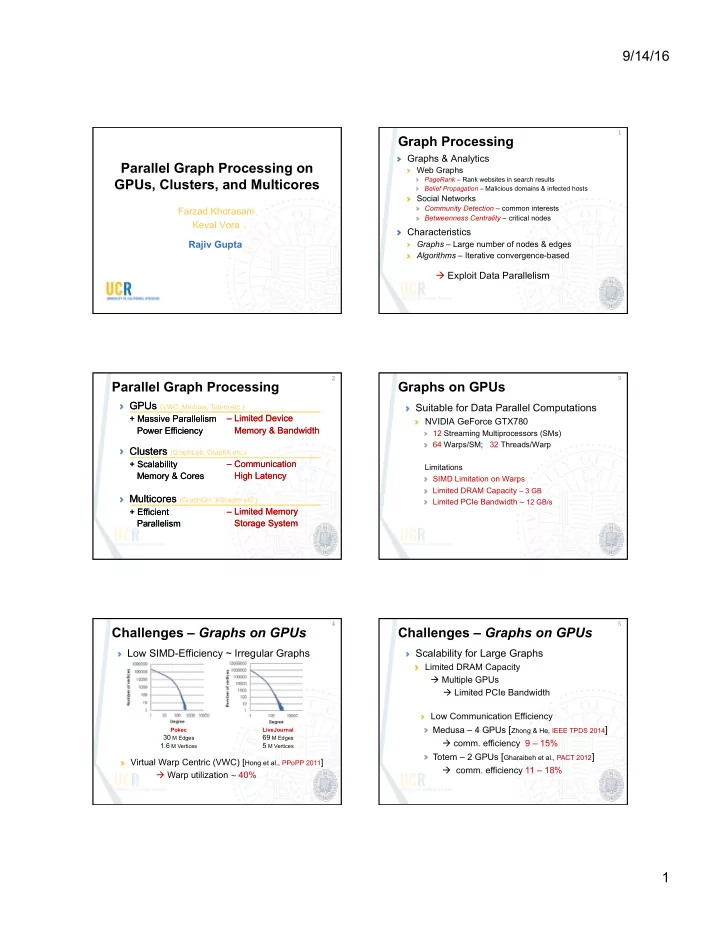

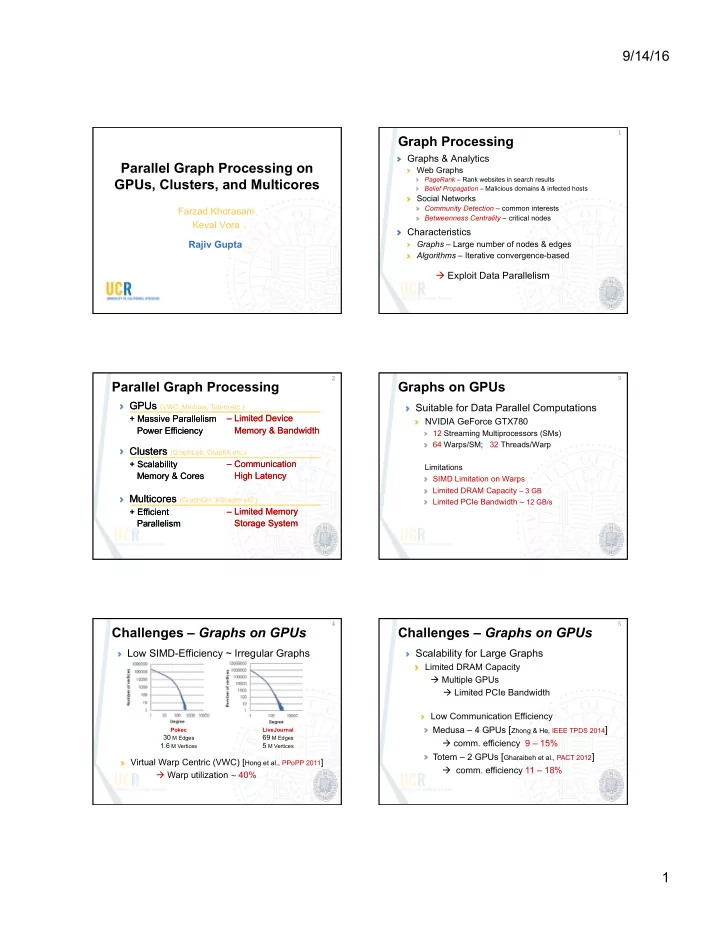

9/14/16 1 ¡ Graph Processing Graphs & Analytics Parallel Graph Processing on Web Graphs PageRank – Rank websites in search results GPUs, Clusters, and Multicores Belief Propagation – Malicious domains & infected hosts Social Networks Community Detection – common interests Farzad Khorasani Betweenness Centrality – critical nodes Keval Vora Characteristics Rajiv Gupta Graphs – Large number of nodes & edges Algorithms – Iterative convergence-based à Exploit Data Parallelism 2 ¡ 3 ¡ Parallel Graph Processing Graphs on GPUs GPUs GPUs (VWC, Medusa, Totem etc.) Suitable for Data Parallel Computations + Massive Parallelism + Massive Parallelism – Limited Device – Limited Device NVIDIA GeForce GTX780 Power Efficiency Power Efficiency Memory & Bandwidth Memory & Bandwidth 12 Streaming Multiprocessors (SMs) 64 Warps/SM; 32 Threads/Warp Clusters (GraphLab, GraphX etc.) Clusters – Communication – Communication + Scalability + Scalability Limitations High Latency High Latency Memory & Cores Memory & Cores SIMD Limitation on Warps Limited DRAM Capacity – 3 GB Multicores (GraphChi, XStream etc.) Multicores Limited PCIe Bandwidth – 12 GB/s + Efficient + Efficient – Limited Memory – Limited Memory Parallelism Parallelism Storage System Storage System 4 ¡ 5 ¡ Challenges – Graphs on GPUs Challenges – Graphs on GPUs Low SIMD-Efficiency ~ Irregular Graphs Scalability for Large Graphs Limited DRAM Capacity à Multiple GPUs à Limited PCIe Bandwidth Low Communication Efficiency Medusa – 4 GPUs [ Zhong & He, IEEE TPDS 2014 ] Pokec LiveJournal 30 M Edges 69 M Edges à comm. efficiency 9 – 15% 1.6 M Vertices 5 M Vertices Totem – 2 GPUs [ Gharaibeh et al., PACT 2012 ] Virtual Warp Centric (VWC) [ Hong et al., PPoPP 2011 ] à comm. efficiency 11 – 18% à Warp utilization ~ ¡ 40% 1

9/14/16 6 ¡ 7 ¡ Iterative Graph Processing Our Approach Single Source Shortest Path (SSSP) Warp Segmentation source Variable number of threads given to a Iteration V 0 V 1 V 2 V 3 V 4 4 V 0 0 0 V 4 4 4 vertex to process its incoming edges 0 0 ∞ ∞ ∞ ∞ 3 4 à High SIMD-Efficiency 2 2 1 0 4 2 ∞ ∞ 1 1 2 V 1 1 V 2 2 2 0 3 2 7 5 5 Vertex Refinement 3 3 0 3 2 6 5 Eliminates unnecessary communication V 3 3 3 = min( V 1 + 3, V 2 + 5 ) à High Communication Efficiency 8 ¡ 9 ¡ Compressed Sparse Row (CSR) Existing Works – Static Graph Representation Each vertex is assigned the same (fixed) number of threads a 0 1 2 3 4 V 0 V 4 V V 0 V 1 V 2 V 3 V 4 [ Harish & Narayanan , HiPC 2007 ] – 1 thread h b c e PTR 0 1 4 5 7 8 [ Hong et al, PPoPP 2011 ] – power of 2 threads V 1 V 2 d [ Kim & Batten, MICRO 2014 ] – a thread-block 4 0 4 2 0 1 2 2 g f E a b c d e f g h à Leads to SIMD inefficiency V 3 10 ¡ 11 ¡ Static – too few / many threads Warp Segmentation 0 1 2 3 4 5 6 7 0 1 2 3 4 5 6 7 V V V 0 V 1 V 0 V 1 V 0 V 0 V 1 V 1 PTR PTR 0 6 8 0 6 8 N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 E N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 E . . . . . . . . . . . . . . . . Assign 4 threads/vertex Assign warp /vertex-group Lane 0 Lane 1 Lane 2 Lane 3 Lane 4 Lane 5 Lane 6 Lane 7 Lane 0 Lane 1 Lane 2 Lane 3 Lane 4 Lane 5 Lane 6 Lane 7 N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 C 0 C 1 C 2 C 3 C 6 C 7 Time N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 C 0 C 1 C 2 C 3 C 4 C 5 C 6 C 7 Time R R R R R R R R R R R R R R R R R F R F R F R F R R R R C 4 C 5 R F R F R F R F R R R F R F 2

9/14/16 12 ¡ 13 ¡ Warp Segmentation Warp Segmentation 0 1 2 3 4 5 6 7 0 1 2 3 4 5 6 7 V V V 0 V 1 V 0 V 1 V 0 V 0 V 1 V 1 PTR 0 6 8 PTR 0 6 N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 E E N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 . . . . . . . . 3 Operation N 0 N 1 N 2 N 3 N 4 N 5 N 6 N 7 Operation N 3 [0,8] [0,8] [0,8] [0,8] [0,8] [0,8] [0,8] [0,8] [0,8] [0,4] [0,4] [0,4] [0,4] [0,4] [0,4] [0,4] [0,4] Binary Search [0,4] Binary Search E item index in PTR E item index in PTR [0,2] [0,2] [0,2] [0,2] [0,2] [0,2] [0,2] [0,2] [0,2] [0,1] [0,1] [0,1] [0,1] [0,1] [0,1] [1,2] [1,2] [0,1] Belonging Vertex Index 0 0 0 0 0 0 1 1 Belonging Vertex Index 0 Index inside Segment Index inside Segment 5 4 3 2 1 0 1 0 2 6 – 3 – 1 from right from right Index inside Segment 3 – 0 0 1 2 3 4 5 0 1 Index inside Segment 3 from left from left 2 + 3 + 1 6 6 6 6 6 6 2 2 6 Segment Size Segment Size 14 ¡ 15 ¡ Experimental Setup Efficiency of Warp Segmentation NVIDIA GeForce GTX780 No shared memory atomics for reduction. No synchronization primitives used. Programs Graphs (#V, #E) All memory accesses are coalesced except Breadth First BFS Search RM33V335E 33m, 335m for accessing neighbor’s values. Connected CC Orkut 3.07m, 234m Exploits instruction-level parallelism. Components LiveJournal 4.85m, 69m NN Neural Network SocPokec 1.63m, 30.6m PR PageRank Single Source RoadNetCA 1.97m, 5.5m SSSP Shortest Path Amazon0312 0.40m, 3.2m Single Source SSWP Widest Path 16 ¡ 17 ¡ Speedups Over VWC Warp Execution Efficiency VWC-2 VWC-4 VWC-8 VWC-16 VWC-32 Warp Seg. Average for Programs Average for Graphs 80 Warp Execution Efficiency (%) BFS 1.27x – 2.60x RM33V335E 1.23x – 1.56x 60 CC 1.33x – 2.90x Orkut 1.15x – 1.99x 40 NN 1.21x – 2.70x LiveJournal 1.29x – 1.99x PR 1.22x – 2.68x SocPokec 1.27x – 1.77x 20 SSSP 1.31x – 2.76x RoadNetCA 1.24x – 9.90x 0 E t E E E E l c r A e 2 u a e 5 1 1 1 4 n e C g l 1 k k t 3 r 0 0 0 3 r t t o 3 SSWP 1.28x – 2.80x Amazon0312 1.53x – 2.68x O u o w i e 0 3 2 2 2 1 o o P N n V m V V V V T G J c o 3 5 5 6 6 s d o e o g a b z 3 2 2 1 1 e C v S g o a M R M M M i W m L i R R E R R R H A SSSP 3

9/14/16 18 ¡ 19 ¡ Scalability – Multiple-GPUs Scalability – Multiple-GPUs V V 0 V 1 V 2 V 3 V 4 V 5 V 6 V 0 V 2 V 4 V 6 V 0 V 2 V 4 V 6 PTR 0 0 1 2 4 6 7 9 V 1 V 3 V 5 a b c d e f g f g E V 1 V 3 V 5 0 0 1 4 2 5 3 4 5 Device 0 Device 1 PCIe GPU DRAM Bandwidth ~12 ~300 V 0 V 1 V 2 V 3 V 4 V 5 V 6 V 0 V 1 V 2 V 3 V 4 V 5 V 6 (GB/s) 0 0 1 2 4 0 2 3 5 a b c d e f g f g 0 0 1 4 2 5 3 4 5 Medusa - ALL Method Totem - Maximal Subset (MS) 20 ¡ 21 ¡ [ IEEE TPDS 2014 ] [ PACT 2012 ] V 0 V 1 V 2 V 3 V 2 V 3 V 4 V 5 V 6 V 4 Device 0 Device 1 Device 0 Device 1 V 0 V 1 V 2 V 3 V 4 V 5 V 6 V 0 V 1 V 2 V 3 V 4 V 5 V 6 V 0 V 1 V 2 V 3 V 4 V 5 V 6 V 0 V 1 V 2 V 3 V 4 V 5 V 6 0 0 1 2 4 0 2 3 5 0 0 1 2 4 0 2 3 5 a b c d e f g f g a b c d e f g f g 0 0 1 4 2 5 3 4 5 0 0 1 4 2 5 3 4 5 à Communication Efficiency 11-18% à Communication Efficiency 9-15% 22 ¡ 23 ¡ Vertex Refinement ¡ (VR) Online Vertex Refinement T #0 T#1 T#2 T#3 T#4 T#5 T#6 T#7 Offline Vertex Refinement V 0 V 1 V 2 V 3 V 4 V 5 V 6 V 7 Marks Boundary Vertices Device V 0 V 3 V 7 Outbox Online Vertex Refinement V 0 V 1 V 2 V 3 V 4 V 6 V 7 On-the-fly by CUDA kernel Operation V 5 Y N N Y N N N Y Is (Updated & Marked) Look for updates in values Binary Reduction 3 3 3 3 3 3 3 3 Reserve Outbox Region A = atomicAdd( deviceOutboxMovingIndex, 3 ); Shuffle A A A A A A A A A Binary Prefix Sum 0 1 1 1 2 2 2 2 Fill Outbox Region O[A+0]=V 0 O[A+1]=V 3 O[A+2]=V 7 4

Recommend

More recommend