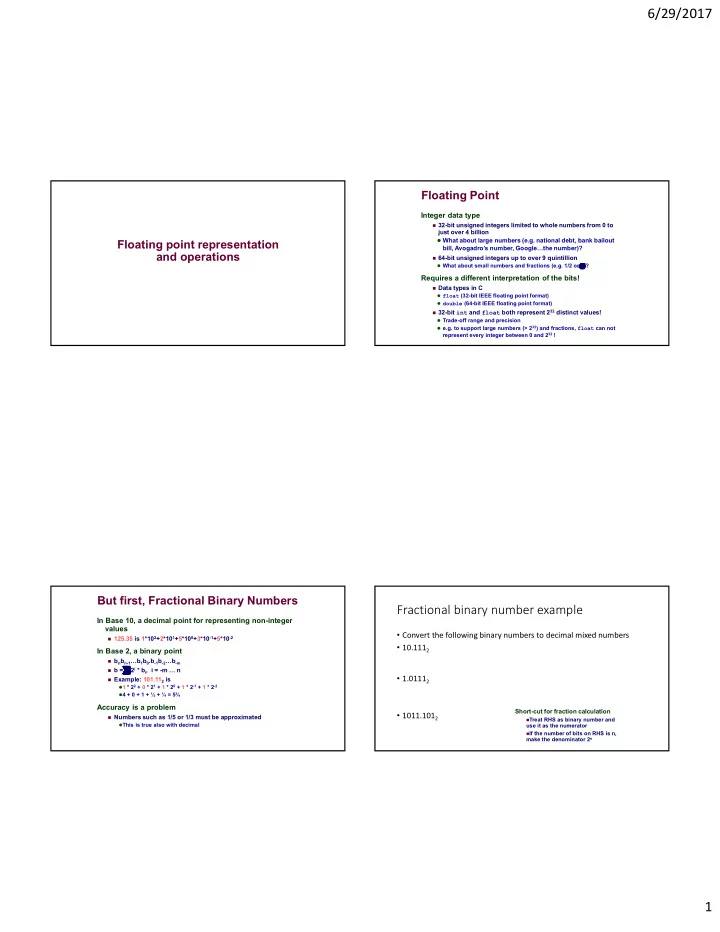

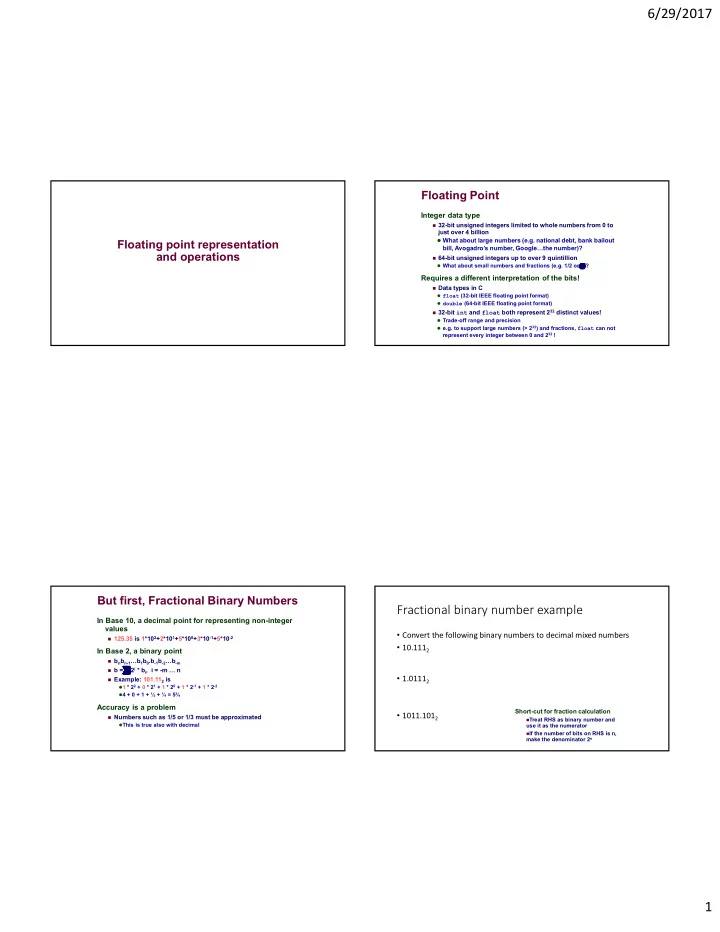

6/29/2017

Floating point representation and operations

Floating Point
• Integer data type
  » 32-bit unsigned integers are limited to whole numbers from 0 to just over 4 billion
  » What about large numbers (e.g. national debt, bank bailout bill, Avogadro’s number, Google…the number)?
  » 64-bit unsigned integers go up to over 18 quintillion
  » What about small numbers and fractions (e.g. 1/2)?
• Requires a different interpretation of the bits!
  » Data types in C: float (32-bit IEEE floating point format), double (64-bit IEEE floating point format)
• 32-bit int and float both have only $2^{32}$ distinct bit patterns!
  » Trade-off between range and precision
  » e.g. to support large numbers ($> 2^{32}$) and fractions, float cannot represent every integer between 0 and $2^{32}$!

But first, Fractional Binary Numbers
• In base 10, a decimal point for representing non-integer values
  » 125.35 is $1 \cdot 10^{2} + 2 \cdot 10^{1} + 5 \cdot 10^{0} + 3 \cdot 10^{-1} + 5 \cdot 10^{-2}$
• In base 2, a binary point
  » $b_n b_{n-1} \ldots b_1 b_0 \,.\, b_{-1} b_{-2} \ldots b_{-m}$
  » $b = \sum_{i=-m}^{n} 2^{i} \cdot b_i$
• Example: $101.11_2$ is $1 \cdot 2^{2} + 0 \cdot 2^{1} + 1 \cdot 2^{0} + 1 \cdot 2^{-1} + 1 \cdot 2^{-2}$ = 4 + 0 + 1 + ½ + ¼ = 5¾
• Accuracy is a problem
  » Numbers such as 1/5 or 1/3 must be approximated
  » The same happens in decimal (e.g. 1/3 = 0.333…)

Fractional binary number example
• Convert the following binary numbers to decimal mixed numbers
  » $10.111_2$
  » $1.0111_2$
  » $1011.101_2$
• Short-cut for fraction calculation
  » Treat the bits to the right of the binary point (RHS) as a binary number and use it as the numerator
  » If the number of bits on the RHS is n, make the denominator $2^{n}$ (e.g. $.11_2$ is 3/4)
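The fraction short-cut above can be sketched in C. This is a minimal illustration, not code from the course: the function name `frac_binary_to_double` is hypothetical, and it assumes the input is a plain string of 0s and 1s with at most one binary point and fewer than 32 fraction bits.

```c
/* Hypothetical helper (not from the slides): convert a fractional binary
 * string such as "101.11" to its numeric value.
 * Assumes only '0', '1' and at most one '.', with < 32 fraction bits. */
double frac_binary_to_double(const char *s)
{
    double value = 0.0;

    /* Integer part: each new bit doubles the running value. */
    while (*s == '0' || *s == '1') {
        value = value * 2.0 + (double)(*s - '0');
        s++;
    }

    if (*s == '.') {
        unsigned long numerator = 0;   /* RHS bits read as a binary number */
        unsigned long denominator = 1; /* becomes 2^n for n RHS bits */

        s++;
        while (*s == '0' || *s == '1') {
            numerator = numerator * 2 + (unsigned long)(*s - '0');
            denominator *= 2;
            s++;
        }
        value += (double)numerator / (double)denominator;
    }
    return value;
}
```

For the example on the slide, `frac_binary_to_double("101.11")` reads the RHS `11` as numerator 3 over denominator 4 and returns 5.75, matching 5¾.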

Floating Point overview
• Problem: how can we represent very large or very small numbers with a compact representation?
  » Current way with int: $5 \cdot 2^{100}$ as 1010000….000000000000? (103 bits)
    Not very compact, but can represent all integers in between
  » Another: $5 \cdot 2^{100}$ as 101 01100100 (i.e. x=101 and y=01100100)? (11 bits)
    Compact, but does not represent all integers in between
• Basis for IEEE Standard 754, “IEEE Floating Point”
  » Supported in most modern CPUs via floating-point unit
  » Encodes rational numbers in the form $M \cdot 2^{E}$
  » Large numbers have positive exponent E
  » Small numbers have negative exponent E
  » Rounding can lead to errors

IEEE Floating-Point
• Specifically, IEEE FP represents numbers in the form $V = (-1)^{s} \cdot M \cdot 2^{E}$
• Three fields
  » s is the sign bit: 1 == negative, 0 == positive
  » M is the significand, a fractional number
  » E is the, possibly negative, exponent

IEEE Floating Point Encoding
• Bit layout: s | exp | frac
  » s is the sign bit
  » exp field is an encoding to derive E
  » frac field is an encoding to derive M
• Sizes
  » Single precision: 8 exp bits, 23 frac bits (32 bits total), C type float
  » Double precision: 11 exp bits, 52 frac bits (64 bits total), C type double
  » Extended precision: 15 exp bits, 63 frac bits, found in Intel FPUs, stored in 80 bits (1 bit wasted)

IEEE Floating-Point
• Depending on the exp value, the bits are interpreted differently
• Normalized (most numbers): exp is neither all 0’s nor all 1’s
  » E is (exp – Bias); E is stored in biased form, which allows for negative exponents
    Bias = 127 for single precision
    Bias = 1023 for double precision
  » M is 1 + frac
• Denormalized (numbers close to 0): exp is all 0’s
  » E is 1 – Bias (not set to –Bias, in order to ensure a smooth transition from Normalized)
  » M is frac
  » Can represent 0 exactly
  » Evenly spaced increments approaching 0
• Special values: exp is all 1’s
  » If frac == 0, then we have ±∞, e.g., divide by 0
  » If frac != 0, we have NaN (Not a Number), e.g., sqrt(-1)
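The three-field layout and the three interpretation cases can be sketched in C as follows. This is an illustrative sketch only: the names `float_fields`, `decode_float`, `classify_fields`, and `unbiased_exponent` are hypothetical, and the code assumes that `float` is the 32-bit IEEE single-precision format described above.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical container for the three fields of a 32-bit float. */
typedef struct {
    uint32_t sign; /* 1 bit */
    uint32_t exp;  /* 8 bits, still in biased form */
    uint32_t frac; /* 23 bits */
} float_fields;

/* Split a float into s, exp, frac. Assumes float is IEEE single precision. */
float_fields decode_float(float f)
{
    uint32_t bits;
    float_fields out;

    memcpy(&bits, &f, sizeof bits); /* copy the raw bits without converting */
    out.sign = bits >> 31;
    out.exp = (bits >> 23) & 0xFFu;
    out.frac = bits & 0x7FFFFFu;
    return out;
}

/* 'N' normalized, 'D' denormalized (including zero), 'I' infinity, 'X' NaN. */
char classify_fields(float_fields x)
{
    if (x.exp == 0xFFu)
        return x.frac == 0 ? 'I' : 'X';
    if (x.exp == 0)
        return 'D';
    return 'N';
}

/* E for normalized and denormalized values (not meaningful for 'I' or 'X'). */
int unbiased_exponent(float_fields x)
{
    const int bias = 127;

    if (x.exp == 0)
        return 1 - bias; /* denormalized: E = 1 - Bias */
    return (int)x.exp - bias; /* normalized: E = exp - Bias */
}
```

For instance, `decode_float(1.0f)` yields sign 0, exp 127, frac 0, so E = 0 and M = 1 + 0, which gives back 1.0.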

Encodings form a continuum
• Number line, left to right: NaN, −∞, −Normalized, −Denorm, −0, +0, +Denorm, +Normalized, +∞, NaN
• Why two regions? As before:
  » Allows 0 to be represented
  » Smooth transition to evenly spaced increments approaching 0
• Encoding also allows magnitude comparison to be done via the integer unit

Normalized Encoding Example
• Using 32-bit float
• Value: `float f = 15213.0; /* exp=8 bits, frac=23 bits */`
  » $15213_{10} = 11101101101101_2 = 1.1101101101101_2 \times 2^{13}$ (normalized form)
• Significand
  » M = $1.1101101101101_2$
  » frac = $11011011011010000000000_2$
• Exponent
  » E = 13
  » Bias = 127
  » exp = 140 = $10001100_2$
• Floating Point Representation:
  » Hex: 4 6 6 D B 4 0 0
  » Binary: 0100 0110 0110 1101 1011 0100 0000 0000
  » 140: 100 0110 0
  » 15213: 1 110 1101 1011 01
• http://thefengs.com/wuchang/courses/cs201/class/05/normalized_float.c

Denormalized Encoding Example
• Using 32-bit float
• Value: `float f = 7.347e-39; /* 7.347*10^-39 */`
• http://thefengs.com/wuchang/courses/cs201/class/05/denormalized_float.c
• Program run:
  » `$ ./denormalized_float`
  » Number to convert: 7.347e-39
  » Best IEEE representation can do is: 7.346999e-39
  » Binary IEEE representation is: 0 00000000 10100000000000001110010
• Interpretation:
  » Sign = 0
  » E is (1 − 127) = −126
  » M is 1/2 + 1/8 + .. ≈ 0.625
  » $M \cdot 2^{E} \approx 0.625 \cdot 2^{-126}$

Distribution of Values
• 7-bit IEEE-like format (the 7 bits are exp and frac; the sign bit is one more)
  » e = 4 exponent bits
  » f = 3 fraction bits
  » Bias is 7 (Bias is always half the number of exponent encodings, minus 1: $2^{e-1} - 1$)
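The worked encoding and the claim about integer comparison can be checked with a short C sketch. It is not the course's `normalized_float.c`; the helper names `float_bits` and `nonneg_float_less` are hypothetical, and the code assumes `float` is IEEE single precision. The integer comparison shown is only valid for non-negative, non-NaN inputs.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: return the raw 32-bit pattern of a float.
 * Assumes float is the 32-bit IEEE single-precision format. */
uint32_t float_bits(float f)
{
    uint32_t bits;

    memcpy(&bits, &f, sizeof bits);
    return bits;
}

/* Compare magnitudes using only an integer comparison.
 * Valid for non-negative, non-NaN floats: because exp sits above frac,
 * a larger value always has a larger bit pattern. */
int nonneg_float_less(float a, float b)
{
    return float_bits(a) < float_bits(b);
}
```

With these helpers, `float_bits(15213.0f)` is `0x466DB400`, the hex pattern derived on the slide, and a denormalized value such as `7.347e-39f` has an all-zero exp field and compares below every normalized positive value.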

7-bit IEEE FP format (Bias=7)

| s | exp | frac | E | Value | Note |
|---|------|------|-----|-------|------|
| 0 | 0000 | 000 | -6 | 0 | Denormalized numbers |
| 0 | 0000 | 001 | -6 | 1/8 × 1/64 = 1/512 | closest to zero |
| 0 | 0000 | 010 | -6 | 2/8 × 1/64 = 2/512 | |
| … | | | | | |
| 0 | 0000 | 110 | -6 | 6/8 × 1/64 = 6/512 | |
| 0 | 0000 | 111 | -6 | 7/8 × 1/64 = 7/512 | largest denorm |
| 0 | 0001 | 000 | -6 | 8/8 × 1/64 = 8/512 | smallest norm (Normalized numbers from here on) |
| 0 | 0001 | 001 | -6 | 9/8 × 1/64 = 9/512 | |
| … | | | | | |
| 0 | 0110 | 110 | -1 | 14/8 × 1/2 = 14/16 | |
| 0 | 0110 | 111 | -1 | 15/8 × 1/2 = 15/16 | closest to 1 below |
| 0 | 0111 | 000 | 0 | 8/8 × 1 = 1 | |
| 0 | 0111 | 001 | 0 | 9/8 × 1 = 9/8 | closest to 1 above |
| 0 | 0111 | 010 | 0 | 10/8 × 1 = 10/8 | |
| … | | | | | |
| 0 | 1110 | 110 | 7 | 14/8 × 128 = 224 | |
| 0 | 1110 | 111 | 7 | 15/8 × 128 = 240 | largest norm |
| 0 | 1111 | 000 | n/a | inf | |

Distribution of Values
• Number distribution gets denser toward zero

Distribution of Values (close-up view)
• 6-bit IEEE-like format: s (1 bit) | exp (3 bits) | frac (2 bits)
  » e = 3 exponent bits
  » f = 2 fraction bits
  » Bias is 3
• [Figure: number line from −1 to 1 marking the denormalized, normalized, and infinity values]

Practice problem 2.47
• Consider a 5-bit IEEE floating point representation
  » 1 sign bit, 2 exponent bits, 2 fraction bits, Bias = 1
• Fill in the following table

| Bits | exp | E | frac | M | V |
|------|-----|---|------|---|---|
| 0 00 00 | | | | | |
| 0 00 11 | | | | | |
| 0 01 00 | | | | | |
| 0 01 10 | | | | | |
| 0 10 11 | | | | | |
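The small formats on these slides all follow the same decoding rules, so one function can decode any of them. The sketch below is an illustration under stated assumptions: the names `tiny_float_value` and `two_to_the` are hypothetical, `k` is the number of exponent bits, `n` the number of fraction bits, the sign bit sits just above the exp field, and Bias is taken to be $2^{k-1} - 1$.

```c
#include <math.h> /* only for the INFINITY and NAN macros */

/* 2^e for a small integer e, by repeated multiplication or division. */
static double two_to_the(int e)
{
    double r = 1.0;

    while (e > 0) { r *= 2.0; e--; }
    while (e < 0) { r /= 2.0; e++; }
    return r;
}

/* Hypothetical decoder for an IEEE-like format with 1 sign bit,
 * k exponent bits and n fraction bits, packed into the low bits of `bits`.
 * Assumes k >= 1, n >= 1 and k + n + 1 fits in an unsigned int. */
double tiny_float_value(unsigned bits, int k, int n)
{
    unsigned frac_mask = (1u << n) - 1u;
    unsigned exp_mask = (1u << k) - 1u;
    unsigned frac = bits & frac_mask;
    unsigned exp = (bits >> n) & exp_mask;
    unsigned sign = (bits >> (n + k)) & 1u;
    int bias = (1 << (k - 1)) - 1;
    double f = (double)frac / (double)(1u << n); /* frac as a fraction in [0, 1) */
    double magnitude;

    if (exp == exp_mask) {
        if (frac != 0)
            return NAN;                /* special value: NaN */
        magnitude = INFINITY;          /* special value: infinity */
    } else if (exp == 0) {
        magnitude = f * two_to_the(1 - bias);               /* denormalized */
    } else {
        magnitude = (1.0 + f) * two_to_the((int)exp - bias); /* normalized */
    }
    return sign ? -magnitude : magnitude;
}
```

As a usage example, the row `0 1110 111` of the Bias = 7 table is `tiny_float_value(0x77, 4, 3)`, which evaluates to 240, and the largest denorm `0 0000 111` is `tiny_float_value(0x07, 4, 3)`, which evaluates to 7/512.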

Practice problem 2.47
• Consider a 5-bit IEEE floating point representation
  » 1 sign bit, 2 exponent bits, 2 fraction bits, Bias = 1
• Fill in the following table

| Bits | exp | E | frac | M | V |
|------|-----|---|------|---|---|
| 0 00 00 | 0 | 0 | 0 | 0 | 0 |
| 0 00 11 | 0 | 0 | ¾ | ¾ | ¾ |
| 0 01 00 | 1 | 0 | 0 | 1 | 1 |
| 0 01 10 | 1 | 0 | ½ | 1 ½ | 1 ½ |
| 0 10 11 | 2 | 1 | ¾ | 1 ¾ | 3 ½ |

Floating Point Operations
• FP addition is
  » Commutative: x + y = y + x
  » NOT associative: (x + y) + z != x + (y + z)
    $(3.14 + 10^{10}) - 10^{10} = 0.0$ in single precision, due to rounding
    $3.14 + (10^{10} - 10^{10}) = 3.14$
  » Very important for scientific and compiler programmers
• FP multiplication
  » Is not associative
  » Does not distribute over addition (single precision, where $10^{20} \cdot 10^{20}$ overflows to infinity)
    $10^{20} \cdot (10^{20} - 10^{20}) = 0.0$
    $10^{20} \cdot 10^{20} - 10^{20} \cdot 10^{20} = \text{NaN}$
  » Again, very important for scientific and compiler programmers

Approximations and estimations
• Famous floating point errors
  » Patriot missile (rounding error from inaccurate representation of 1/10 in time calculations)
    28 killed due to failure in intercepting a Scud missile (2/25/1991)
  » Ariane 5 (floating point cast to integer for efficiency caused overflow trap)
  » Microsoft's sqrt estimator...

Floating Point in C
• C guarantees two levels
  » float: single precision
  » double: double precision
• Casting between data types (not pointer types)
  » Casting between int, float, and double results in (sometimes inexact) conversions to the new representation
• float to int
  » Not defined when beyond the range of int
  » Generally saturates to TMin or TMax
• double to int
  » Same as with float
• int to double
  » Exact conversion (a 32-bit int fits in the 53-bit significand)
• int to float
  » Will round for large values (e.g. those that require more than 24 significant bits: 23 frac bits plus the implied leading 1)
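The non-associativity examples and the int-to-float rounding can be reproduced with a short C sketch. The function names are hypothetical, and the code assumes `float` is IEEE single precision, `int` is 32 bits, and the compiler is not run with value-changing options such as `-ffast-math`. Intermediate results are stored in `volatile float` variables so that each step is rounded to single precision even on machines that evaluate in a wider format.

```c
/* (x + y) + z, with each step rounded to single precision. */
float add_left_grouped(float x, float y, float z)
{
    volatile float t = x + y;
    volatile float r = t + z;
    return r;
}

/* x + (y + z), with each step rounded to single precision. */
float add_right_grouped(float x, float y, float z)
{
    volatile float t = y + z;
    volatile float r = x + t;
    return r;
}

/* a * (b - c): subtract first, then multiply. */
float mul_of_difference(float a, float b, float c)
{
    volatile float d = b - c;
    volatile float r = a * d;
    return r;
}

/* a * b - a * c: multiply first (may overflow to infinity), then subtract. */
float difference_of_products(float a, float b, float c)
{
    volatile float p = a * b;
    volatile float q = a * c;
    volatile float r = p - q;
    return r;
}

/* int -> float -> int. Only call with values whose float image is still
 * within the range of int; converting an out-of-range float is undefined. */
int roundtrip_through_float(int x)
{
    volatile float f = (float)x;
    return (int)f;
}

/* int -> double -> int, which is exact for a 32-bit int. */
int roundtrip_through_double(int x)
{
    volatile double d = (double)x;
    return (int)d;
}
```

Under those assumptions, `add_left_grouped(3.14f, 1e10f, -1e10f)` gives 0.0 while `add_right_grouped(3.14f, 1e10f, -1e10f)` gives 3.14, and `difference_of_products(1e20f, 1e20f, 1e20f)` is NaN because both products overflow to infinity. `roundtrip_through_float(16777217)` returns 16777216, since $2^{24} + 1$ needs 25 significant bits, whereas `roundtrip_through_double(16777217)` returns the input unchanged.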
