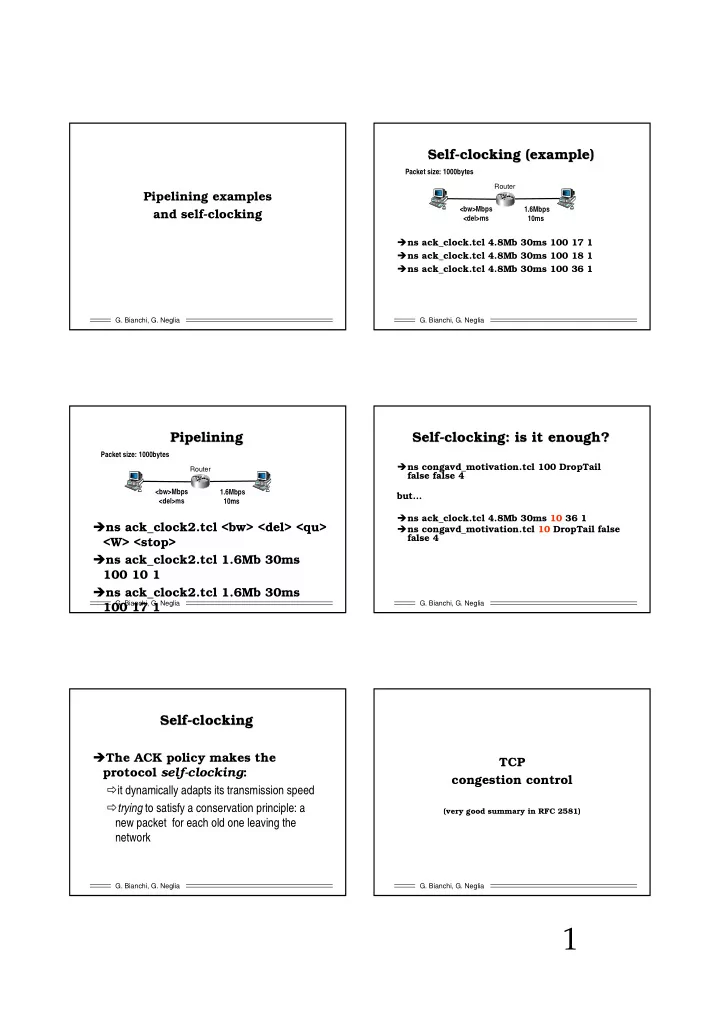

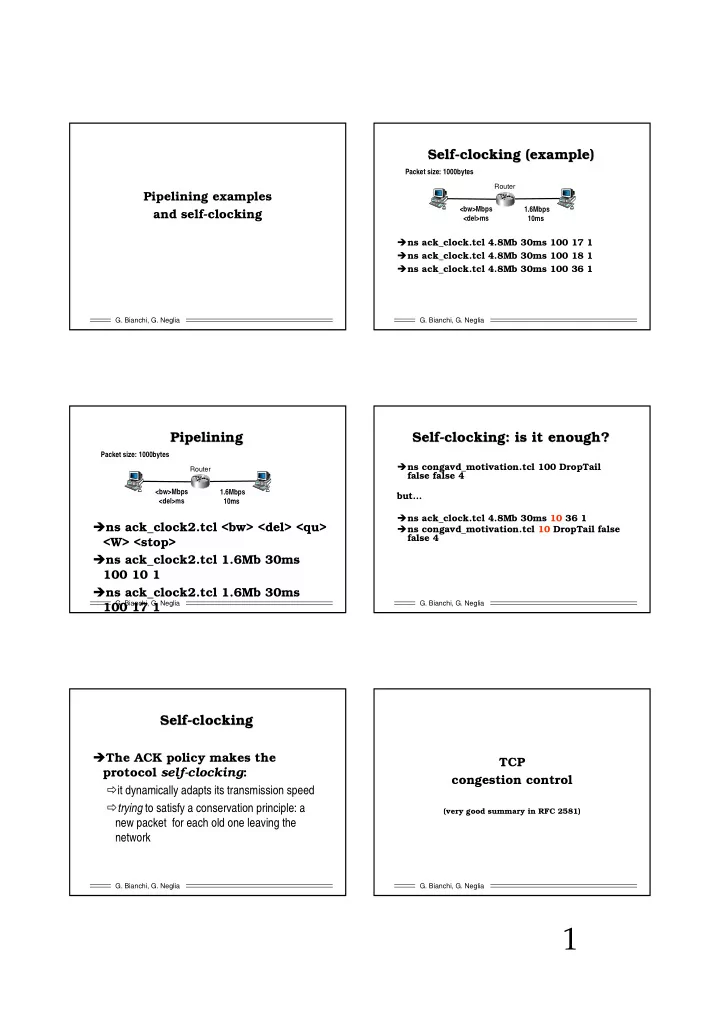

Self-clocking (example)

[Figure: sender and receiver connected through a Router; one link is labeled <bw> Mbps, <del> ms and the other 1.6 Mbps, 10 ms]
- Packet size: 1000 bytes
- Pipelining examples and self-clocking:
  - `ns ack_clock.tcl 4.8Mb 30ms 100 17 1`
  - `ns ack_clock.tcl 4.8Mb 30ms 100 18 1`
  - `ns ack_clock.tcl 4.8Mb 30ms 100 36 1`

Pipelining

[Figure: same topology: Router; links labeled <bw> Mbps, <del> ms and 1.6 Mbps, 10 ms]
- Packet size: 1000 bytes
- `ns ack_clock2.tcl <bw> <del> <qu> <W> <stop>`
  - `ns ack_clock2.tcl 1.6Mb 30ms 100 10 1`
  - `ns ack_clock2.tcl 1.6Mb 30ms 100 17 1`

Self-clocking: is it enough?
- `ns congavd_motivation.tcl 100 DropTail false false 4`
- but…
  - `ns ack_clock.tcl 4.8Mb 30ms 10 36 1`
  - `ns congavd_motivation.tcl 10 DropTail false false 4`

Self-clocking
- The ACK policy makes the TCP protocol self-clocking:
  - it dynamically adapts its transmission speed,
  - trying to satisfy a conservation principle: a new packet for each old one leaving the network

Congestion control (very good summary in RFC 2581)

G. Bianchi, G. Neglia
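The pipelining and self-clocking behaviour can be illustrated with a toy model. This is not the ns scripts listed above: it is a minimal sketch assuming a single FIFO bottleneck with unlimited buffer, a fixed per-packet service time T, all propagation delay lumped into a fixed round trip D, one ACK per packet and no losses. T = 5 ms (a 1000-byte packet on a 1.6 Mbps link) and D = 80 ms are illustrative assumptions, and `simulate_ack_clock`, `measured_rate` and `predicted_rate` are hypothetical names. Each returning ACK releases exactly one new packet, which is the conservation principle.

```python
from typing import List


def simulate_ack_clock(window: int, service_time: float, prop_rtt: float,
                       n_packets: int) -> List[float]:
    """Return the bottleneck departure time of each packet.

    Assumed toy model: one FIFO bottleneck with unlimited buffer, fixed
    service time per packet, all propagation delay lumped into prop_rtt,
    one ACK per packet, no losses.
    """
    send = [0.0] * n_packets
    depart = [0.0] * n_packets
    last_departure = 0.0
    for i in range(n_packets):
        if i >= window:
            # Conservation principle: packet i is released by the ACK of
            # packet i - window, which reaches the sender prop_rtt after
            # that packet left the bottleneck.
            send[i] = depart[i - window] + prop_rtt
        # FIFO bottleneck: wait until the link is free, then transmit.
        depart[i] = max(send[i], last_departure) + service_time
        last_departure = depart[i]
    return depart


def measured_rate(depart: List[float]) -> float:
    """Packets per second over the second half of the run (steady state)."""
    k = len(depart) // 2
    return (len(depart) - 1 - k) / (depart[-1] - depart[k])


def predicted_rate(window: int, service_time: float, prop_rtt: float) -> float:
    """Window-limited rate W / RTT, capped by the bottleneck rate 1 / T."""
    return min(window / (service_time + prop_rtt), 1.0 / service_time)


if __name__ == "__main__":
    T = 8 * 1000 / 1.6e6  # 1000-byte packet on a 1.6 Mbps link: 5 ms
    D = 0.080             # assumed round-trip propagation delay
    for w in (1, 10, 17, 36):
        dep = simulate_ack_clock(w, T, D, 2000)
        print(w, round(measured_rate(dep), 1), round(predicted_rate(w, T, D), 1))
```

With these assumed numbers the pipe holds (T + D)/T = 17 packets: smaller windows are window-limited at W/(T + D), while larger ones are capped at the bottleneck rate and only add roughly W − 17 queued packets, which a small buffer would have to drop. The model itself ignores losses, so it only hints at why self-clocking alone may not be enough.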

The problem of congestion

[Figure: SENDERs (bulk flows) and RECEIVERs (large capacity); the receivers advertise a large window, so there are several outstanding segments in the network]
- Internal network congestion:
  - queues build up
  - delay increases
  - RTOs expire
  - more segments transmitted -> more congestion!

TCP approach for detecting and controlling congestion
- The IP protocol does not implement mechanisms to detect congestion in IP routers
  - Unlike other networks, e.g. ATM
- Indirect means are necessary (TCP is an end-to-end protocol)
- TCP approach: congestion is detected by the lack of ACKs
  - couldn't work efficiently in the 60s & 70s (error-prone transmission lines)
  - OK in the 80s & 90s (reliable transmission)
  - what about wireless networks???
- Controlling congestion: use a SECOND window (congestion window)
  - Locally computed at the sender
  - Outstanding segments: min(receiver_window, congestion_window)

The goal of congestion control

[Figure: N = 4 TCP connections from SENDERs (bulk flows) to RECEIVERs (large capacity) share a bottleneck link of rate C]
- Each should transmit at rate C/4.
- Since $\mathrm{thr} \approx \dfrac{W \cdot \mathrm{MSS}}{\mathrm{RTT}}$, each should adapt W accordingly…
- How can sources be led to know the RIGHT value of W??

Starting a TCP transmission
- A new offered flow may suddenly overload network nodes
  - The receiver window is used to avoid receiver buffer overflow
  - But it may be a large value (16-64 KB)
- Idea: slow start
  - Start with a small value of cwnd
  - And increase it as soon as packets get through
    - Arrival of ACKs = no packets lost = no congestion
- Initial cwnd size:
  - Just 1 MSS!
  - Recent (1998) proposals for more aggressive starts (up to 4 MSS) have been found to be dangerous

History of congestion control
- Before 1986: the Internet meltdown!
  - No mechanisms employed to react to internal network congestion
- 1986: Slow Start + Congestion Avoidance
  - Van Jacobson, TCP Berkeley
  - Proposes the idea of making TCP reactive to congestion
- 1988: Fast Retransmit (TCP Tahoe)
  - Van Jacobson, first implemented in the 1988 BSD Tahoe release
- 1990: Fast Recovery (TCP Reno)
  - Van Jacobson, first implemented in the 1990 BSD Reno release
- 1995-1996: TCP NewReno
  - Floyd (based on Hoe's idea), RFC 2582
  - Today the de-facto standard

Slow start – exponential increase

[Figure: time diagram: connection request, connection granted, request HTTP object; as ACKs come back the sender's window grows Cwnd=1, Cwnd=2, Cwnd=3, Cwnd=4, …]
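The window relations on the slides above (outstanding data = min(receiver_window, congestion_window), thr ≈ W·MSS/RTT, a C/N share per flow) and loss-free slow start can be sketched as follows. This is a minimal illustration under stated assumptions: windows counted in whole segments, one ACK per segment (no delayed ACKs), and every segment of a round ACKed within one RTT. The function names and the example numbers (C = 1.6 Mbps, N = 4, MSS = 1000 bytes, RTT = 100 ms, rwnd = 64 segments) are hypothetical.

```python
from typing import List


def effective_window(cwnd: int, rwnd: int) -> int:
    """Outstanding segments allowed: min(receiver_window, congestion_window)."""
    return min(cwnd, rwnd)


def throughput_bps(window_segments: float, mss_bytes: int, rtt_s: float) -> float:
    """thr ~ W * MSS / RTT, in bits per second."""
    return window_segments * mss_bytes * 8 / rtt_s


def fair_window(capacity_bps: float, n_flows: int, mss_bytes: int,
                rtt_s: float) -> float:
    """Window (in segments) at which each of n_flows gets C / N."""
    return capacity_bps / n_flows * rtt_s / (mss_bytes * 8)


def slow_start(rwnd_segments: int, rounds: int, initial_cwnd: int = 1) -> List[int]:
    """Window actually used in each RTT round of loss-free slow start."""
    cwnd = initial_cwnd
    windows = []
    for _ in range(rounds):
        w = effective_window(cwnd, rwnd_segments)
        windows.append(w)
        cwnd += w  # w ACKs come back, +1 MSS each: cwnd doubles per RTT
    return windows


if __name__ == "__main__":
    print(slow_start(rwnd_segments=64, rounds=8))  # [1, 2, 4, 8, 16, 32, 64, 64]
    w = fair_window(1.6e6, 4, 1000, 0.1)           # hypothetical bottleneck
    print(round(w, 2), throughput_bps(w, 1000, 0.1))
```

Starting from 1 MSS, the window needs only six RTTs to reach a 64-segment receiver window, which is why a "slow" start still grows exponentially.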

Detecting congestion and restarting
- Segment gets lost
  - Detected via RTO expiration
  - Indirectly notifies that one of the network nodes along the path has lost a segment
    - Because of a full queue
- Restart from cwnd = 1 (slow start)
- But introduce a supplementary control: the slow start threshold
  - ssthresh = max(cwnd/2, 2 MSS)
  - The idea is that we now KNOW that there is congestion in the network, and we need to increase our rate more carefully…
  - ssthresh defines the "congestion avoidance" region

Simplified example (overall)

[Figure: congestion window cwnd (in MSS, 0 to 16) versus number of transmissions; two timeouts annotated "Timeout: cwnd = 1, ssthresh = 8" and "Timeout: cwnd = 1, ssthresh = 6"]

What happens AFTER RTO?
- If cwnd < ssthresh
  - Slow start region: increase rate exponentially
- If cwnd >= ssthresh
  - Congestion avoidance region: increase rate linearly
  - At a rate of 1 MSS per RTT
  - Practical implementation: cwnd += MSS*MSS/cwnd
    - Good approximation of 1 MSS per RTT
    - Alternative (exact) implementations: count!! (count ACKs and add 1 MSS once a full window has been acknowledged)
- Which initial ssthresh?
  - ssthresh is initially set to 65535: unreachable!
- In essence, congestion avoidance is flow control imposed by the sender, while the advertised window is flow control imposed by the receiver

Congestion avoidance (without fast retransmit)

[Figure: the sender transmits Seq=50, Seq=100, Seq=150, …, Seq=350 with current cwnd = 6; Seq=100 is lost, so every later segment returns ack=100; when the RTO expires the sender sets cwnd = 1 and retransmits seq=100, and the receiver answers ack=400!]
- And then, restart normally with cwnd = 2 and send seq=400, 450
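The per-ACK and timeout rules above can be sketched as a small state machine. This is a minimal sketch, not a real TCP stack, assuming cwnd and ssthresh in bytes, MSS = 1000 bytes, one ACK per segment, an RTT "round" in which every full segment of the current window is ACKed, and only RTO-based loss detection (no fast retransmit). `CongestionState` and `run_rounds` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List

MSS = 1000  # bytes (assumed segment size)


@dataclass
class CongestionState:
    cwnd: float = MSS        # initial cwnd: just 1 MSS
    ssthresh: float = 65535  # initial ssthresh: effectively unreachable

    def on_new_ack(self) -> None:
        """Update cwnd for one ACK that acknowledges new data."""
        if self.cwnd < self.ssthresh:
            self.cwnd += MSS                    # slow start: doubles per RTT
        else:
            self.cwnd += MSS * MSS / self.cwnd  # congestion avoidance: ~1 MSS per RTT

    def on_timeout(self) -> None:
        """RTO expired: remember half the window, restart from 1 MSS."""
        self.ssthresh = max(self.cwnd / 2, 2 * MSS)
        self.cwnd = MSS


def run_rounds(state: CongestionState, rounds: int) -> List[float]:
    """cwnd (in MSS) at the start of each loss-free RTT round."""
    trace = []
    for _ in range(rounds):
        trace.append(state.cwnd / MSS)
        for _ in range(int(state.cwnd // MSS)):  # one ACK per full segment
            state.on_new_ack()
    return trace


if __name__ == "__main__":
    s = CongestionState(cwnd=16 * MSS)  # hypothetical: RTO hits at cwnd = 16 MSS
    s.on_timeout()
    print(s.ssthresh, s.cwnd)           # 8000.0 1000
    print(run_rounds(s, 5))
```

After the timeout the trace starts 1, 2, 4, 8 MSS (exponential up to ssthresh = 8 MSS), then grows to about 8.95 MSS in the next round: the linear region, slightly below the ideal +1 MSS per RTT.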
Congestion avoidance example

One update per received ACK:
- cwnd = 1000 B = 1 MSS
- cwnd = 1000 + 1000×1000/1000 = 2000
- cwnd = 2000 + 1000×1000/2000 = 2500
- cwnd = 2500 + 1000×1000/2500 = 2900
- cwnd = 2900 + 1000×1000/2900 = 3245
- cwnd = … = 3553
- cwnd = … = 3834

Congestion avoidance (with fast retransmit): TCP Tahoe

[Figure: same scenario as before (Seq=50 … Seq=350, current cwnd = 6, Seq=100 lost, repeated ack=100), but the sender sets cwnd = 1 and retransmits seq=100 upon the duplicate ACKs, before the RTO expires; the receiver answers ack=400!]
- And then, restart normally with cwnd = 2 and send seq=400, 450
- Same as before, but a shorter time to recover from the packet loss!
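The arithmetic of the congestion avoidance example can be reproduced, and compared with the exact "count" variant, with the sketch below; a duplicate-ACK counter for fast retransmit is included as well. Assumptions: MSS = 1000 bytes; each cwnd is rounded to whole bytes, as the slide's numbers suggest; the counting variant adds 1 MSS once a full window of ACKs has arrived; the threshold of 3 duplicate ACKs is the value specified in RFC 2581, since the figure does not state it. All function names are hypothetical.

```python
from typing import List, Optional

MSS = 1000  # bytes, as in the example


def ca_approx(cwnd: int, acks: int) -> List[int]:
    """cwnd += MSS*MSS/cwnd per ACK, rounded to whole bytes like the slide."""
    trace = [cwnd]
    for _ in range(acks):
        cwnd = round(cwnd + MSS * MSS / cwnd)
        trace.append(cwnd)
    return trace


def ca_exact(cwnd_segments: int, acks: int) -> List[int]:
    """'Count' variant: +1 MSS after a full window's worth of ACKs."""
    counter = 0
    trace = [cwnd_segments]
    for _ in range(acks):
        counter += 1
        if counter >= cwnd_segments:
            cwnd_segments += 1
            counter = 0
        trace.append(cwnd_segments)
    return trace


def fast_retransmit_index(acks: List[int], dupthresh: int = 3) -> Optional[int]:
    """Index of the ACK that triggers fast retransmit, or None if none does."""
    last_ack = None
    dups = 0
    for i, ack in enumerate(acks):
        if ack == last_ack:
            dups += 1
            if dups == dupthresh:
                return i  # Tahoe: retransmit the missing segment, cwnd = 1
        else:
            last_ack = ack
            dups = 0
    return None


if __name__ == "__main__":
    print(ca_approx(1000, 6))                         # [1000, 2000, 2500, 2900, 3245, 3553, 3834]
    print(ca_exact(1, 6))                             # [1, 2, 2, 3, 3, 3, 4]
    print(fast_retransmit_index([100, 100, 100, 100, 100, 100]))  # 3
```

After the same 6 ACKs (1 + 2 + 3), counting reaches exactly 4 MSS while the per-ACK formula stops at 3834 bytes, so the approximation slightly undershoots 1 MSS per RTT. In the fast retransmit figure, the ACK stream ack=100, 100, 100, 100, … triggers the retransmission of seq=100 at the third duplicate, well before the RTO would expire.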
