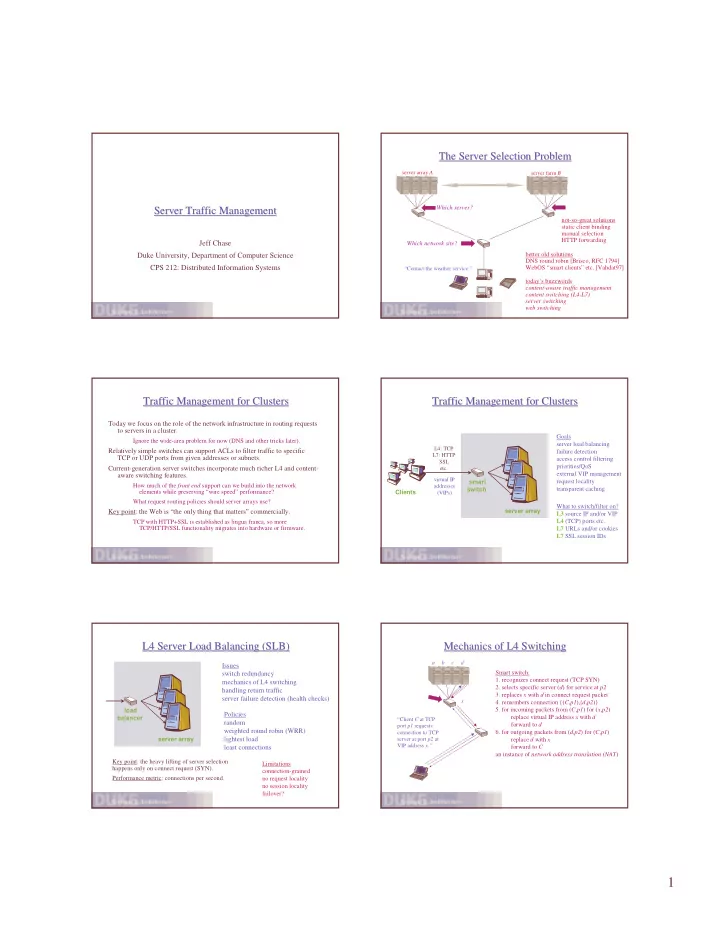

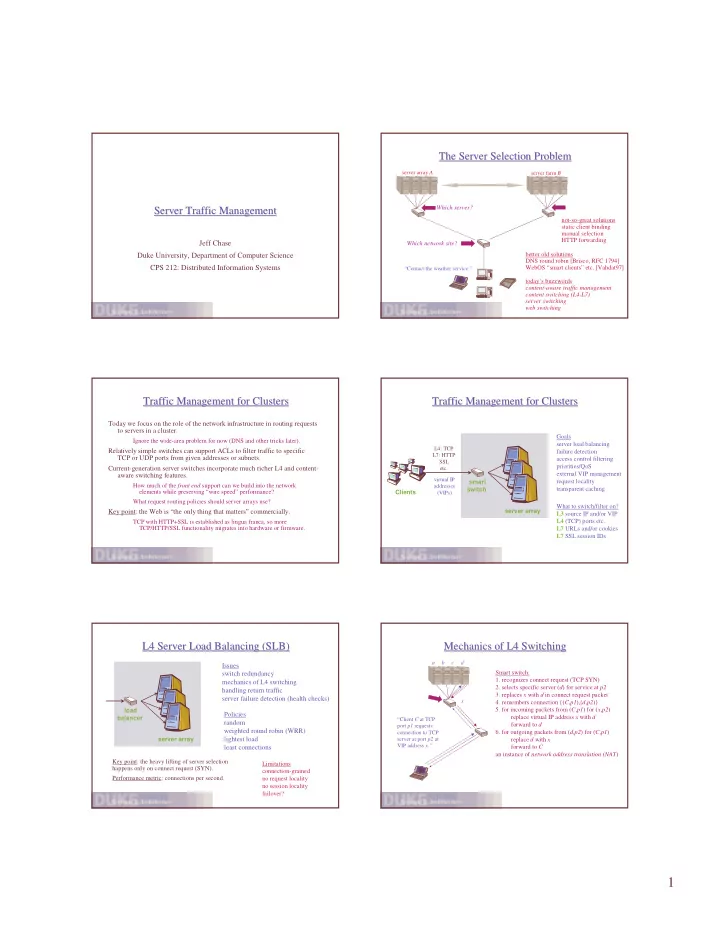

Server Traffic Management
Jeff Chase
Duke University, Department of Computer Science
CPS 212: Distributed Information Systems

The Server Selection Problem
[Figure: server array A and server farm B; “Which network site?”; “Which server?”; example request: “Contact the weather service.”]
Not-so-great solutions:
• static client binding
• manual selection
• HTTP forwarding
Better old solutions:
• DNS round robin [Brisco, RFC 1794]
• WebOS “smart clients” etc. [Vahdat97]
Today’s buzzwords:
• content-aware traffic management
• content switching (L4-L7)
• server switching
• web switching

Traffic Management for Clusters
Today we focus on the role of the network infrastructure in routing requests to servers in a cluster. Ignore the wide-area problem for now (DNS and other tricks later).
Relatively simple switches can support ACLs to filter traffic to specific TCP or UDP ports from given addresses or subnets. Current-generation server switches incorporate much richer L4 and content-aware switching features.
• How much of the front-end support can we build into the network elements while preserving “wire speed” performance?
• What request routing policies should server arrays use?
Key point: the Web is “the only thing that matters” commercially. TCP with HTTP+SSL is established as the lingua franca, so more TCP/HTTP/SSL functionality migrates into hardware or firmware.

Traffic Management for Clusters
Goals:
• server load balancing
• failure detection
• access control filtering
• priorities/QoS
• request locality
• transparent caching
• external VIP management
[Figure: clients reach a smart switch at virtual IP addresses (VIPs) in front of a server array; L4: TCP; L7: HTTP, SSL, etc.]
What to switch/filter on?
• L3: source IP and/or VIP
• L4: TCP ports etc.
• L7: URLs and/or cookies
• L7: SSL session IDs

L4 Server Load Balancing (SLB)
[Figure: servers a, b, c, d in a server array behind a load balancer at VIP x. “Client C at TCP port p1 requests connection to TCP server at port p2 at VIP address x.”]
Issues:
• switch redundancy
• mechanics of L4 switching
• handling return traffic
• server failure detection (health checks)
Policies:
• random
• weighted round robin (WRR)
• lightest load
• least connections
Limitations:
• connection-grained
• no request locality
• no session locality
• failover?

Mechanics of L4 Switching
Smart switch:
1. recognizes connect request (TCP SYN)
2. selects specific server (d) for service at p2
3. replaces x with d in connect request packet
4. remembers connection {(C, p1), (d, p2)}
5. for incoming packets from (C, p1) for (x, p2): replace virtual IP address x with d, forward to d
6. for outgoing packets from (d, p2) for (C, p1): replace d with x, forward to C
This is an instance of network address translation (NAT).
Key point: the heavy lifting of server selection happens only on the connect request (SYN). Performance metric: connections per second.
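The six steps on the Mechanics of L4 Switching slide can be sketched as a toy connection table. This is a minimal sketch under stated assumptions. Packets are reduced to their (address, port) pairs, and addresses are plain strings such as "C", "x", and "d". The names `L4Switch`, `Packet`, `inbound`, and `outbound` are hypothetical. A real switch rewrites headers at wire speed, fixes checksums, and removes table entries on FIN/RST, which this sketch does not model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, TCP port); addresses are plain strings here


@dataclass(frozen=True)
class Packet:
    """Toy packet: only the fields an L4 switch matches on or rewrites."""
    src: Endpoint
    dst: Endpoint
    syn: bool = False


class L4Switch:
    """Hypothetical NAT-style L4 load balancer fronting one virtual IP (VIP)."""

    def __init__(self, vip: str, servers: List[str],
                 select: Callable[[List[str]], str]):
        self.vip = vip
        self.servers = servers
        self.select = select  # server selection policy, consulted only on SYN
        # Step 4: connection table keyed by (client endpoint, service port p2).
        self.conns: Dict[Tuple[Endpoint, int], str] = {}

    def inbound(self, pkt: Packet) -> Optional[Packet]:
        key = (pkt.src, pkt.dst[1])
        if pkt.syn and key not in self.conns:
            # Steps 1-2: recognize the connect request and pick a server d.
            self.conns[key] = self.select(self.servers)
        server = self.conns.get(key)
        if server is None:
            return None  # not a SYN and no known connection: drop it
        # Steps 3 and 5: replace the VIP x with d, then forward to d.
        return Packet(pkt.src, (server, pkt.dst[1]), pkt.syn)

    def outbound(self, pkt: Packet) -> Packet:
        # Step 6: replace d with the VIP x so the client only ever sees x.
        return Packet((self.vip, pkt.src[1]), pkt.dst, pkt.syn)
```

For example, `L4Switch("x", ["a", "b", "c", "d"], select=lambda s: s[-1])` sends a SYN from `("C", 1234)` to `("x", 80)` on to `("d", 80)`. Every later packet of that connection is then a dictionary lookup. This reflects the slide's point that selection cost is paid once per connection.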

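The four SLB policies listed on the L4 Server Load Balancing slide (random, WRR, lightest load, least connections) can each be written as a small selection function. This is an illustrative sketch: the function names are hypothetical. The "load" metric for lightest-load is assumed to come from health checks, and this WRR variant sends each server's share back to back, whereas other WRR variants interleave them.

```python
import itertools
import random
from typing import Callable, Dict, List


def random_policy(servers: List[str], rng=None) -> str:
    """Random: pick any server uniformly (rng defaults to the random module)."""
    return (rng or random).choice(servers)


def weighted_round_robin(weights: Dict[str, int]) -> Callable[[], str]:
    """WRR: return a picker that cycles servers in proportion to integer weights."""
    # Simple, non-interleaved schedule: server s appears weights[s] times per cycle.
    schedule = [s for s, w in weights.items() for _ in range(w)]
    cycle = itertools.cycle(schedule)
    return lambda: next(cycle)


def least_connections(active_conns: Dict[str, int]) -> str:
    """Least connections: fewest open connections as counted by the switch."""
    return min(active_conns, key=active_conns.get)  # ties go to the first server


def lightest_load(reported_load: Dict[str, float]) -> str:
    """Lightest load: smallest load metric reported by the servers (assumed)."""
    return min(reported_load, key=reported_load.get)
```

With weights `{"a": 2, "b": 1}`, the WRR picker yields a, a, b, a, a, b. Least connections needs only state the L4 switch already keeps in its connection table. Lightest load depends on feedback from the servers.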
Handling Return Traffic
[Figure: clients, a smart switch, and a dumb switch in front of the server array; paths labeled “fast” and “slow”.]
• incoming traffic routes to the smart switch
• smart switch changes MAC address
• smart switch leaves dest VIP intact
• all servers accept traffic for VIPs
• server responds to client IP
• dumb switch routes outgoing traffic
Simply a matter of configuration.
Examples: IBM eNetwork Dispatcher (host-based); Foundry, Alteon, Arrowpoint, etc.
Alternatives: TCP handoff (e.g., LARD)

URL Switching
Idea: the switch parses the HTTP request, retrieves the request URL, and uses the URL to guide server selection.
[Figure: clients, a smart web switch, and a server array whose servers hold URL sets a,b,c; d,e,f; g,h,i.]
Example: Foundry
• host name
• URL prefix
• URL suffix
• substring pattern
• URL hashing
Advantages:
• separate static content from dynamic
• reduce content duplication
• improve server cache performance
• cascade switches for more complex policies
Issues:
• HTTP parsing cost
• URL length
• delayed binding
• server failures
• HTTP 1.1
• session locality
• hybrid SLB and URL
• popular objects

The Problem of Sessions
In some cases it is useful for a given client’s requests to “stick” to a given server for the duration of a session. This is known as session affinity or session persistence.
• session state may be bound to a specific server
• SSL negotiation overhead
One approach: remember {source, VIP, port} and map to the same server.
• The mega-proxy problem: what if the client’s requests filter through a proxy farm? Can we recognize the source?
Alternative: recognize sessions by cookie or SSL session ID.
• cookie hashing
• cookie switching also allows differentiated QoS. Think “frequent flyer miles”.

LARD
Idea: route requests based on the request URL, to maximize locality at back-end servers.
LARD predates commercial URL switches, and was concurrent with URL-hashing proxy cache arrays (CARP).
[Figure: a LARD front-end in front of a server array, with target table (a,b,c : 1), (d,e,f : 2), (g,h,i : 3).]
The LARD front-end maintains an LRU cache of request targets and their locations, and a table of active connections for each server.
Policies:
1. LB (locality-based) is URL hashing.
2. LARD is locality-aware SLB: route to the target’s site if there is one and it is not “overloaded”; else pick a new site for the target.
3. LARD/R augments LARD with replication for popular objects.

LARD Performance Study
The LARD paper compares SLB/WRR and LB with LARD approaches:
• simulation study: small Rice and IBM web server logs; jiggle simulation parameters to achieve desired result
• Nodes have small memories with greedy-dual replacement.
• WRR combined with global cache-sharing among servers (GMS). WRR/GMS is global cache LRU with duplicates and cache-sharing cost. LB/GC is global cache LRU with duplicate suppression and no cache-sharing cost.

LARD Performance Conclusions
1. WRR has the lowest cache hit ratios and the lowest throughput. There is much to be gained by improving cache effectiveness.
2. LB* achieve slightly better cache hit ratios than LARD*. WRR/GMS lags behind...it’s all about duplicates.
3. The caching benefit of LB* is minimal, and LB is almost as good as LB/GC. Locality-* request distribution induces good cache behavior at the back ends: global cache replacement adds little.
4. Better load balancing in the LARD* strategies dominates the caching benefits of LB*. LARD/R and LARD achieve the best throughput and scalability; LARD/R yields slightly better throughput.
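The cookie-hashing alternative on The Problem of Sessions slide can be sketched as follows. The server is chosen from a hash of a session cookie, so requests that arrive through different mega-proxy addresses still land on the same server. Several details are assumptions: the cookie name `SESSIONID`, the function names, and the fallback to source-IP hashing. The slide's own source-based approach remembers {source, VIP, port} in a table, and hashing is swapped in here for brevity.

```python
import zlib
from http.cookies import SimpleCookie
from typing import List, Optional

SESSION_COOKIE = "SESSIONID"  # hypothetical session cookie name


def stable_hash(key: str) -> int:
    # crc32 is stable across processes, unlike Python's salted built-in hash().
    return zlib.crc32(key.encode())


def pick_server(servers: List[str], client_ip: str,
                cookie_header: Optional[str] = None) -> str:
    """Session affinity by cookie hashing, falling back to the source address."""
    if cookie_header:
        jar = SimpleCookie()
        jar.load(cookie_header)  # parse a "name=value; name2=value2" header
        if SESSION_COOKIE in jar:
            return servers[stable_hash(jar[SESSION_COOKIE].value) % len(servers)]
    # No session cookie: hash the source IP. Behind a proxy farm, one client's
    # requests may arrive from several IPs and lose affinity (mega-proxy problem).
    return servers[stable_hash(client_ip) % len(servers)]
```

Two requests carrying `Cookie: SESSIONID=abc123` from proxies 10.0.0.1 and 10.0.0.2 map to the same server. Note that this requires the switch to parse HTTP headers, so it is an L7 policy.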

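The LB and LARD policies on the LARD slide can be contrasted in a short sketch. LB hashes the URL to a fixed server. The LARD-style front-end keeps an LRU cache of targets and a count of active connections per server, and it moves a target only when its site is overloaded. The overload test uses two thresholds, `t_low` and `t_high`, in the style of the LARD paper's basic policy. Treat the exact condition, the default values, and all names here as assumptions. Replication (LARD/R) is not modeled.

```python
import zlib
from collections import OrderedDict
from typing import Dict, List


def lb_url_hash(url: str, servers: List[str]) -> str:
    """LB: pure URL hashing. Good locality, but ignores server load."""
    return servers[zlib.crc32(url.encode()) % len(servers)]


class LardFrontEnd:
    """Hypothetical LARD-style front-end (basic LARD, no replication)."""

    def __init__(self, servers: List[str], t_low: int = 25, t_high: int = 65,
                 cache_size: int = 10_000):
        self.load: Dict[str, int] = {s: 0 for s in servers}  # active connections
        self.targets: "OrderedDict[str, str]" = OrderedDict()  # LRU: URL -> server
        self.t_low, self.t_high = t_low, t_high  # illustrative thresholds
        self.cache_size = cache_size

    def _least_loaded(self) -> str:
        return min(self.load, key=self.load.get)

    def _overloaded(self, server: str) -> bool:
        n = self.load[server]
        some_underloaded = any(c < self.t_low for c in self.load.values())
        return (n > self.t_high and some_underloaded) or n >= 2 * self.t_high

    def assign(self, url: str) -> str:
        server = self.targets.get(url)
        if server is None or self._overloaded(server):
            # No site for this target yet, or its site is overloaded: pick a new one.
            server = self._least_loaded()
        self.targets[url] = server
        self.targets.move_to_end(url)  # mark target as most recently used
        if len(self.targets) > self.cache_size:
            self.targets.popitem(last=False)  # evict least recently used target
        self.load[server] += 1
        return server

    def finish(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        self.load[server] -= 1
```

Under light load, `assign` behaves like URL partitioning and keeps each target's working set on one back end. Under skewed load, a hot target migrates to the least-loaded server. That load sensitivity is what separates LARD from LB in the performance conclusions.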
LARD Performance: Issues and Questions
1. LB (URL switching) has great cache behavior but lousy throughput. Why? Underutilized time results show poor load balancing.
2. WRR/GMS has good cache behavior and great load balancing, but not-so-great throughput. Why? How sensitive is it to CPU speed and network speed?
3. What is the impact of front-end caching?
4. What is the effectiveness of bucketed URL hashing policies? E.g., Foundry: hash URL to a bucket, pick server for bucket based on load.
5. Why don’t L7 switch products support LARD? Should they? [USENIX 2000]: use L4 front end; back ends do LARD handoff.

Possible Projects
1. Study the impact of proxy caching on the behavior of the request distribution policies (“flatter” popularity distributions).
2. Study the behavior of alternative locality-based policies that incorporate better load balancing in the front-end. How close can we get to the behavior of LARD without putting a URL lookup table in the front-end? E.g., look at URL switching policies in commercial L7 switches.
3. Implement request switching policies in the FreeBSD kernel, and measure their performance over GigE. Mods to FreeBSD for recording connection state and forwarding packets are already in place.
4. How to integrate smart switches with protocols for group membership or failure detection?
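The bucketed URL hashing idea in question 4 (hash the URL to a bucket, pick a server for the bucket based on load) can be sketched as follows. The front-end keeps a fixed-size bucket table instead of a per-URL table. This is one plausible reading, not Foundry's actual algorithm. The class name, the bucket count, the "bind on first use" rule, and the `rebalance` hook are all assumptions.

```python
import zlib
from typing import Dict, List, Optional


class BucketedUrlHash:
    """Hypothetical bucketed URL hashing: URL -> bucket -> server chosen by load."""

    def __init__(self, servers: List[str], num_buckets: int = 256):
        self.load: Dict[str, int] = {s: 0 for s in servers}  # active connections
        self.buckets: List[Optional[str]] = [None] * num_buckets  # bucket -> server

    def bucket_of(self, url: str) -> int:
        return zlib.crc32(url.encode()) % len(self.buckets)

    def route(self, url: str) -> str:
        b = self.bucket_of(url)
        if self.buckets[b] is None:
            # Unbound bucket: bind it to the currently lightest-loaded server.
            self.buckets[b] = min(self.load, key=self.load.get)
        server = self.buckets[b]
        self.load[server] += 1
        return server

    def done(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        self.load[server] -= 1

    def rebalance(self, bucket: int) -> None:
        # Unbind a bucket (e.g., its server is hot or failed); the next request
        # to that bucket is placed by load again.
        self.buckets[bucket] = None
```

The front-end state is bounded by `num_buckets` rather than by the number of distinct URLs. This bears on Project 2's question of how close a policy can get to LARD without a URL lookup table. The trade-off is coarser load balancing, because all URLs in a bucket move together.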
