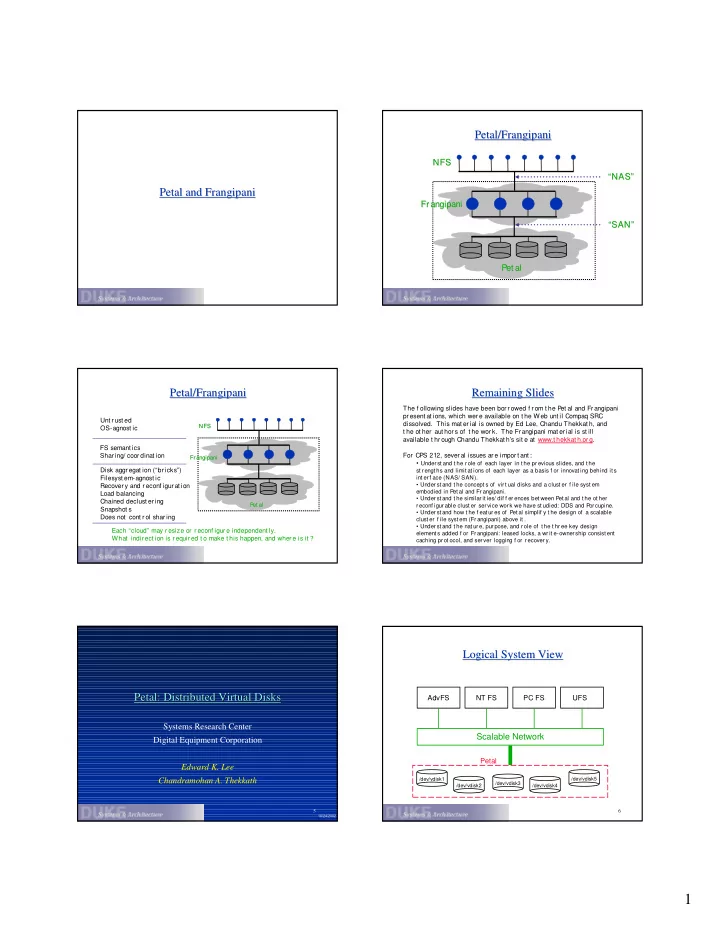

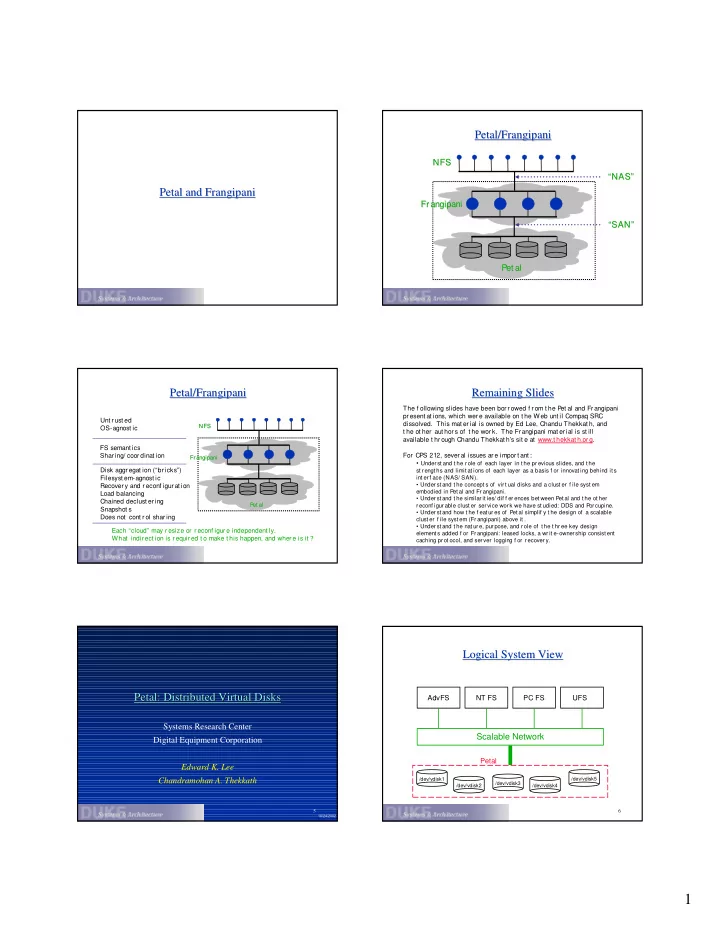

Petal/Frangipani

[Diagram: Petal and Frangipani as a layered stack. NFS sits above the “NAS” interface, Frangipani sits between the “NAS” and “SAN” interfaces, and Petal sits below the “SAN” interface.]

Petal/Frangipani

NFS
• Untrusted
• OS-agnostic

Frangipani
• FS semantics
• Sharing/coordination

Petal
• Disk aggregation (“bricks”)
• Filesystem-agnostic
• Recovery and reconfiguration
• Load balancing
• Chained declustering
• Snapshots
• Does not control sharing

Each “cloud” may resize or reconfigure independently.
What indirection is required to make this happen, and where is it?

Remaining Slides

The following slides have been borrowed from the Petal and Frangipani presentations, which were available on the Web until Compaq SRC dissolved. This material is owned by Ed Lee, Chandu Thekkath, and the other authors of the work. The Frangipani material is still available through Chandu Thekkath’s site at www.thekkath.org.

For CPS 212, several issues are important:
• Understand the role of each layer in the previous slides, and the strengths and limitations of each layer as a basis for innovating behind its interface (NAS/SAN).
• Understand the concepts of virtual disks and a cluster file system embodied in Petal and Frangipani.
• Understand the similarities/differences between Petal and the other reconfigurable cluster service work we have studied: DDS and Porcupine.
• Understand how the features of Petal simplify the design of a scalable cluster file system (Frangipani) above it.
• Understand the nature, purpose, and role of the three key design elements added for Frangipani: leased locks, a write-ownership consistent caching protocol, and server logging for recovery.
Petal: Distributed Virtual Disks

Edward K. Lee
Chandramohan A. Thekkath
Systems Research Center
Digital Equipment Corporation

Logical System View

[Diagram: clients running AdvFS, NT FS, PC FS, and UFS connect over a Scalable Network to Petal, which exports the virtual disks /dev/vdisk1, /dev/vdisk2, /dev/vdisk3, /dev/vdisk4, and /dev/vdisk5.]

10/24/2002

Physical System View

[Diagram: clients running a Parallel Database or Cluster File System each mount /dev/shared1 and connect over a Scalable Network to four Petal Servers.]

Virtual Disks

Each disk provides 2^64 byte address space.
Created and destroyed on demand.
Allocates disk storage on demand.
Snapshots via copy-on-write.
Online incremental reconfiguration.

Virtual to Physical Translation

(vdiskID, offset) → (server, disk, diskOffset)

[Diagram: Server 0 through Server 3. The vdiskID indexes the Virtual Disk Directory, which points to a GMap. The GMap selects one of the per-server maps PMap0, PMap1, PMap2, PMap3, and the offset is looked up in that PMap to give (disk, diskOffset).]

Global State Management

Based on Leslie Lamport’s Paxos algorithm.
Global state is replicated across all servers.
Consistent in the face of server & network failures.
A majority is needed to update global state.
Any server can be added/removed in the presence of failed servers.

Fault-Tolerant Global Operations

Create/Delete virtual disks.
Snapshot virtual disks.
Add/Remove servers.
Reconfigure virtual disks.

Data Placement & Redundancy

Supports non-redundant and chained-declustered virtual disks.
Parity can be supported if desired.
Chained-declustering tolerates any single component failure.
Tolerates many common multiple failures.
Throughput scales linearly with additional servers.
Throughput degrades gracefully with failures.
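The translation path on the "Virtual to Physical Translation" slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Petal's implementation: the 64 KB translation granularity, the round-robin choice of server, and the names `PetalServer`, `GlobalMap`, and `translate` are all hypothetical.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 64 * 1024  # assumed translation granularity, for illustration only


@dataclass
class PetalServer:
    """Holds one server's physical map (PMap)."""
    name: str
    # (vdisk_id, block number) -> (disk, diskOffset of the block)
    pmap: dict = field(default_factory=dict)


@dataclass
class GlobalMap:
    """GMap: the ordered list of server indices a virtual disk is spread over."""
    servers: list


def translate(vdir, servers, vdisk_id, offset):
    """Map (vdiskID, offset) to (server, disk, diskOffset)."""
    gmap = vdir[vdisk_id]                       # 1. virtual disk directory lookup
    block, within = divmod(offset, BLOCK_SIZE)
    # 2. GMap picks the server (assumption: simple round-robin striping)
    server_idx = gmap.servers[block % len(gmap.servers)]
    # 3. that server's PMap gives the physical location of the block;
    #    a KeyError here means the block has not been allocated yet
    disk, disk_offset = servers[server_idx].pmap[(vdisk_id, block)]
    return server_idx, disk, disk_offset + within


servers = [PetalServer(f"petal{i}") for i in range(4)]
vdir = {"vdisk1": GlobalMap(servers=[0, 1, 2, 3])}
servers[1].pmap[("vdisk1", 5)] = (2, 1_048_576)  # block 5 lives on server 1, disk 2

result = translate(vdir, servers, "vdisk1", 5 * BLOCK_SIZE + 100)
print(result)
```

Only the first two steps touch global state. The last step uses a map local to one server, which is one way to read why the slides separate the GMap from the per-server PMaps.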

Chained Declustering

| Server0 | Server1 | Server2 | Server3 |
|---------|---------|---------|---------|
| D0      | D1      | D2      | D3      |
| D3      | D0      | D1      | D2      |
| D4      | D5      | D6      | D7      |
| D7      | D4      | D5      | D6      |

The Prototype

Digital ATM network.
  • 155 Mbit/s per link.
8 AlphaStation Model 600.
  • 333 MHz Alpha running Digital Unix.
72 RZ29 disks.
  • 4.3 GB, 3.5 inch, fast SCSI (10 MB/s).
  • 9 ms avg. seek, 6 MB/s sustained transfer rate.
Unix kernel device driver.
User-level Petal servers.

The Prototype

[Diagram: clients src-ss1, src-ss2, ..., src-ss8, each mounting /dev/vdisk1, connect through the Digital ATM Network (AN2) to Petal servers petal1, petal2, ..., petal8.]

Throughput Scaling

[Figure: throughput scale-up versus number of servers, with a LINEAR reference line. Series: 512B Rd, 8KB Rd, 64KB Rd, 512B Wr, 8KB Wr, 64KB Wr.]

Virtual Disk Reconfiguration

[Figure: throughput in MB/s versus elapsed time in minutes while reconfiguring between 6 servers and 8 servers. Virtual disk w/ 1GB of allocated storage; 8KB reads & writes.]
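The placement pattern in the chained-declustering layout (D0 on Server0 with its copy on Server1, D3 on Server3 with its copy wrapping around to Server0) can be written down as a small Python sketch. The function names `replicas` and `read_target` are hypothetical, and the failover policy shown (read the copy only when the primary is down) is a simplifying assumption, not a description of how Petal balances load.

```python
def replicas(block, n_servers):
    """Return (primary, secondary) server indices for a data block.

    Matches the slide's layout: block Di has its primary copy on server
    i mod n and its second copy on the next server in the chain.
    """
    primary = block % n_servers
    secondary = (primary + 1) % n_servers
    return primary, secondary


def read_target(block, n_servers, failed=frozenset()):
    """Pick a server to read from, skipping failed servers."""
    primary, secondary = replicas(block, n_servers)
    if primary not in failed:
        return primary
    if secondary not in failed:
        return secondary
    # Both copies sit on adjacent servers in the chain, so losing two
    # neighbouring servers makes the block unavailable.
    raise RuntimeError(f"block D{block} unavailable: both replicas failed")


for block in range(8):
    print(f"D{block}", replicas(block, 4))
```

With four servers, any single failure leaves every block readable, and so does the loss of two non-adjacent servers such as Server0 and Server2. That is consistent with the slides' claim that many common multiple failures are tolerated.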

Frangipani: A Scalable Distributed File System

C. A. Thekkath, T. Mann, and E. K. Lee
Systems Research Center
Digital Equipment Corporation

Why Not An Old File System on Petal?

Traditional file systems (e.g., UFS, AdvFS) cannot share a block device.
The machine that runs the file system can become a bottleneck.

Frangipani

Behaves like a local file system
  • multiple machines cooperatively manage a Petal disk
  • users on any machine see a consistent view of data
Exhibits good performance, scaling, and load balancing
Easy to administer

Ease of Administration

Frangipani machines are modular
  • can be added and deleted transparently
Common free space pool
  • users don’t have to be moved
Automatically recovers from crashes
Consistent backup without halting the system

Components of Frangipani

File system core
  • implements the Digital Unix vnode interface
  • uses the Digital Unix Unified Buffer Cache
  • exploits Petal’s large virtual space
Locks with leases
Write-ahead redo log

Locks

Multiple reader/single writer
Locks are moderately coarse-grained
  • protects entire file or directory
Dirty data is written to disk before lock is given to another machine
Each machine aggressively caches locks
  • uses lease timeouts for lock recovery
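The rules on the "Locks" slide (multiple reader/single writer, dirty data written back before a lock moves, lease timeouts for recovery) can be sketched as a toy lock service in Python. Everything here is hypothetical: the class `LockService`, its callbacks `flush` and `recover`, and the 30-second default lease are assumptions for illustration, and the sketch releases a revoked lock outright rather than modelling downgrades or the real protocol's messages.

```python
class LockService:
    """Toy multiple-reader/single-writer lock table with per-machine leases."""

    def __init__(self, flush, recover, lease_seconds=30.0):
        self.flush = flush              # flush(machine, lock_name): write dirty data back
        self.recover = recover          # recover(machine): start recovery for a dead machine
        self.lease_seconds = lease_seconds  # assumed lease length
        self.holders = {}               # lock_name -> {machine: "r" or "w"}
        self.lease_expiry = {}          # machine -> time at which its lease lapses

    def _drop_machine(self, machine):
        # Lease expired: treat the machine as failed, trigger recovery,
        # then release every lock it held.
        self.recover(machine)
        for holders in self.holders.values():
            holders.pop(machine, None)
        del self.lease_expiry[machine]

    def acquire(self, machine, lock_name, mode, now):
        self.lease_expiry[machine] = now + self.lease_seconds  # renew lease
        holders = self.holders.setdefault(lock_name, {})
        for other, other_mode in list(holders.items()):
            if other == machine or (mode == "r" and other_mode == "r"):
                continue                # readers can share the lock
            if self.lease_expiry.get(other, 0.0) <= now:
                self._drop_machine(other)
            else:
                if other_mode == "w":
                    self.flush(other, lock_name)  # dirty data reaches disk first
                del holders[other]
        holders[machine] = mode


flushed, recovered = [], []
svc = LockService(flush=lambda m, l: flushed.append((m, l)),
                  recover=lambda m: recovered.append(m))
svc.acquire("A", "file1", "w", now=0)    # A writes file1
svc.acquire("B", "file1", "r", now=1)    # A must flush before B can read
svc.acquire("C", "file1", "r", now=2)    # B and C share the read lock
svc.acquire("D", "file1", "w", now=100)  # B and C leases have lapsed
print(flushed, recovered, svc.holders)
```

Because the lock covers a whole file or directory, a single flush on revocation is enough to give the next holder a consistent view. This is the coarse-grained trade-off the slide points at.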

Logging

Frangipani uses a write-ahead redo log for metadata
  • log records are kept on Petal
Data is written to Petal
  • on sync, fsync, or every 30 seconds
  • on lock revocation or when the log wraps
Each machine has a separate log
  • reduces contention
  • independent recovery

Recovery

Recovery is initiated by the lock service
Recovery can be carried out on any machine
  • log is distributed and available via Petal
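The logging and recovery slides describe a per-machine redo log stored on Petal that any machine can replay. A minimal Python sketch of that idea follows. The names `Petal`, `LogRecord`, `append`, and `replay` are hypothetical, and the use of a per-block version number to skip updates that already reached disk is an assumption made here so that replay is safe to repeat; the slides do not say how this is done.

```python
from dataclasses import dataclass


@dataclass
class LogRecord:
    block: str       # metadata block the update applies to
    version: int     # assumed per-block version number
    new_value: str   # redo information: the block's new contents


class Petal:
    """Stand-in for a shared virtual disk: metadata blocks plus one log per machine."""

    def __init__(self):
        self.blocks = {}  # block -> (version, value)
        self.logs = {}    # machine -> list of LogRecord


def append(petal, machine, record):
    """Write-ahead step: the record is logged before the block is updated."""
    petal.logs.setdefault(machine, []).append(record)


def replay(petal, failed_machine):
    """Redo a failed machine's log; can run on any machine that reaches Petal."""
    applied = 0
    for rec in petal.logs.get(failed_machine, []):
        on_disk_version = petal.blocks.get(rec.block, (0, None))[0]
        if rec.version > on_disk_version:   # skip updates already on disk
            petal.blocks[rec.block] = (rec.version, rec.new_value)
            applied += 1
    petal.logs[failed_machine] = []         # log is no longer needed
    return applied


petal = Petal()
petal.blocks["inode7"] = (1, "size=0")                  # first update reached disk
append(petal, "A", LogRecord("inode7", 1, "size=0"))
append(petal, "A", LogRecord("inode7", 2, "size=4096"))  # logged, never written back
append(petal, "A", LogRecord("dir3", 1, "entry:a.txt"))  # logged, never written back

applied = replay(petal, "A")  # machine A crashed; another machine runs this
print(applied, petal.blocks)
```

Keeping one log per machine means the replay above never has to interleave records from different writers, which is the "independent recovery" point on the Logging slide.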
