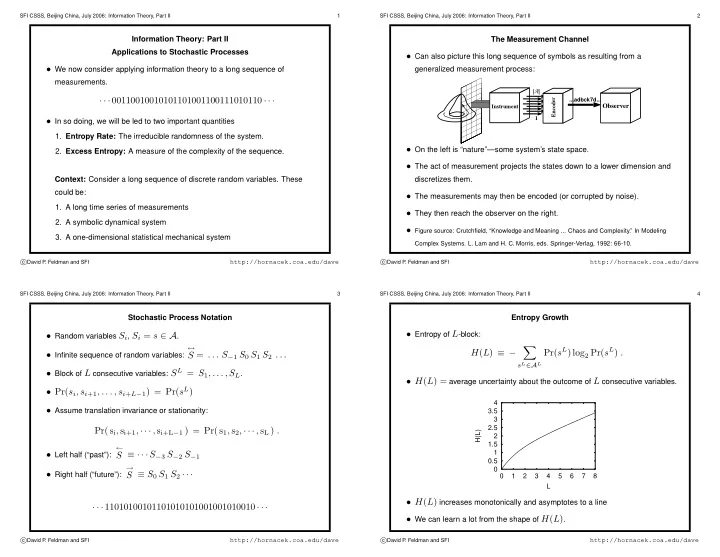

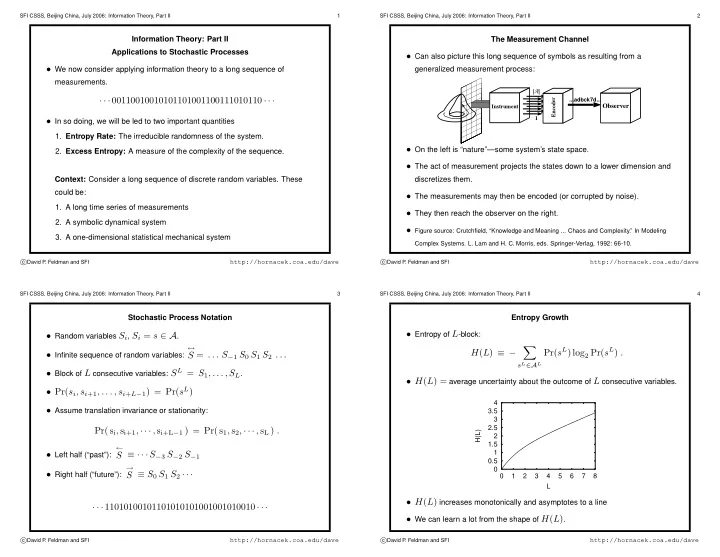

SFI CSSS, Beijing China, July 2006: Information Theory, Part II
http://hornacek.coa.edu/dave
© David P. Feldman and SFI

Information Theory: Part II
Applications to Stochastic Processes

• We now consider applying information theory to a long sequence of measurements.

  · · · 00110010010101101001100111010110 · · ·

• In so doing, we will be led to two important quantities:
  1. Entropy Rate: The irreducible randomness of the system.
  2. Excess Entropy: A measure of the complexity of the sequence.

Context: Consider a long sequence of discrete random variables. These could be:
  1. A long time series of measurements
  2. A symbolic dynamical system
  3. A one-dimensional statistical mechanical system

The Measurement Channel

• Can also picture this long sequence of symbols as resulting from a generalized measurement process:

  [Figure: the measurement channel. Recoverable labels: Instrument, Encoder, $|\mathcal{A}|$, Observer; example encoded output: ...adbck7d...]

• On the left is “nature”: some system’s state space.
• The act of measurement projects the states down to a lower dimension and discretizes them.
• The measurements may then be encoded (or corrupted by noise).
• They then reach the observer on the right.
• Figure source: Crutchfield, “Knowledge and Meaning ... Chaos and Complexity.” In Modeling Complex Systems. L. Lam and H. C. Morris, eds. Springer-Verlag, 1992: 66-10.

Stochastic Process Notation

• Random variables $S_i$, with $S_i = s \in \mathcal{A}$.
• Infinite sequence of random variables: $\overleftrightarrow{S} = \ldots S_{-1} S_0 S_1 S_2 \ldots$
• Block of $L$ consecutive variables: $S^L = S_1, \ldots, S_L$.
• $\Pr(s_i, s_{i+1}, \ldots, s_{i+L-1}) = \Pr(s^L)$
• Assume translation invariance or stationarity:
  $\Pr(s_i, s_{i+1}, \cdots, s_{i+L-1}) = \Pr(s_1, s_2, \cdots, s_L)$.
• Left half (“past”): $\overleftarrow{S} \equiv \cdots S_{-3} S_{-2} S_{-1}$
• Right half (“future”): $\overrightarrow{S} \equiv S_0 S_1 S_2 \cdots$

  · · · 11010100101101010101001001010010 · · ·

Entropy Growth

• Entropy of an $L$-block:
  $H(L) \equiv - \sum_{s^L \in \mathcal{A}^L} \Pr(s^L) \log_2 \Pr(s^L)$.
• $H(L)$ = average uncertainty about the outcome of $L$ consecutive variables.

  [Figure: $H(L)$ versus $L$, for $L$ from 0 to 8.]

• $H(L)$ increases monotonically and asymptotes to a line.
• We can learn a lot from the shape of $H(L)$.
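As a rough illustration of the block entropy definition, the sketch below estimates $H(L)$ from the empirical frequencies of length-$L$ blocks in a finite symbol sequence. The function name `block_entropy` and the test sequence are assumptions made for this example, not part of the original slides, and the estimate only approximates the true $H(L)$ when the sequence is much longer than the number of possible blocks.

```python
from collections import Counter
from math import log2

def block_entropy(symbols, L):
    """Estimate H(L) in bits from empirical frequencies of length-L blocks."""
    if L == 0:
        return 0.0  # no symbols observed, no uncertainty
    n_blocks = len(symbols) - L + 1
    # Slide a window of width L over the sequence and count each block.
    counts = Counter(tuple(symbols[i:i + L]) for i in range(n_blocks))
    return -sum((c / n_blocks) * log2(c / n_blocks) for c in counts.values())

# Hypothetical data: many repetitions of a period-2 pattern.
sequence = [0, 1] * 500
H = [block_entropy(sequence, L) for L in range(0, 5)]

for L, value in enumerate(H):
    print(f"H({L}) = {value:.4f} bits")
```

For this period-2 sequence the estimates stay close to 1 bit for every $L \geq 1$: once the phase is known, longer blocks add no new uncertainty.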

Entropy Rate

• Let’s first look at the slope of the line:

  [Figure: $H(L)$ versus $L$, together with its asymptote $E + h_\mu L$; the asymptote meets the vertical axis at $E$.]

• Slope of $H(L)$: $h_\mu(L) \equiv H(L) - H(L-1)$
• The slope of the line to which $H(L)$ asymptotes is known as the entropy rate:
  $h_\mu = \lim_{L \to \infty} h_\mu(L)$.

Entropy Rate, continued

• $h_\mu(L) = H[S_L \mid S_1 S_2 \ldots S_{L-1}]$
• I.e., $h_\mu(L)$ is the average uncertainty of the next symbol, given that the previous $L-1$ symbols have been observed.

Interpretations of Entropy Rate

• Uncertainty per symbol.
• Irreducible randomness: the randomness that persists even after accounting for correlations over arbitrarily large blocks of variables.
• The randomness that cannot be “explained away”.
• Entropy rate is also known as the Entropy Density or the Metric Entropy.
• $h_\mu$ = Lyapunov exponent for many classes of 1D maps.
• The entropy rate may also be written: $h_\mu = \lim_{L \to \infty} \frac{H(L)}{L}$.
• $h_\mu$ is equivalent to thermodynamic entropy.
• These limits exist for all stationary processes.

How does $h_\mu(L)$ approach $h_\mu$?

• For finite $L$, $h_\mu(L) \geq h_\mu$. Thus, the system appears more random than it is.

  [Figure: $h_\mu(L)$ versus $L$, starting at $H(1)$ for $L = 1$ and decaying toward $h_\mu$; the shaded area between the curve and $h_\mu$ is labeled $E$.]

• We can learn about the complexity of the system by looking at how the entropy density converges to $h_\mu$.
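The two expressions for the entropy rate, the slope $H(L) - H(L-1)$ and the ratio $H(L)/L$, can be compared on a small example. The sketch below assumes a hypothetical period-3 pattern `110` (not taken from the slides) observed at a uniformly random phase, so that block probabilities can be computed exactly rather than estimated; the helper name `periodic_block_entropy` is likewise an assumption.

```python
from collections import Counter
from math import log2

def periodic_block_entropy(pattern, L):
    """Exact H(L) in bits for the stationary process that repeats `pattern`,
    with the starting phase chosen uniformly at random."""
    if L == 0:
        return 0.0
    p = len(pattern)
    # Each of the p phases is equally likely and yields one length-L block.
    blocks = Counter(
        tuple(pattern[(phase + k) % p] for k in range(L)) for phase in range(p)
    )
    return -sum((c / p) * log2(c / p) for c in blocks.values())

pattern = [1, 1, 0]  # hypothetical period-3 example, not from the slides
H = [periodic_block_entropy(pattern, L) for L in range(0, 9)]
h_slope = [H[L] - H[L - 1] for L in range(1, 9)]  # h_mu(L) = H(L) - H(L-1)
h_ratio = [H[L] / L for L in range(1, 9)]         # H(L) / L

for L in range(1, 9):
    print(f"L={L}  h_mu(L)={h_slope[L - 1]:.4f}  H(L)/L={h_ratio[L - 1]:.4f}")
```

Both columns approach the true entropy rate of zero from above, but the slope reaches it after a few symbols while $H(L)/L$ decays only like $1/L$.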

The Excess Entropy

  [Figure: $h_\mu(L)$ versus $L$, starting at $H(1)$ for $L = 1$ and decaying toward $h_\mu$; the shaded area between the curve and $h_\mu$ is labeled $E$.]

• The excess entropy captures the nature of the convergence and is defined as the shaded area above:
  $E \equiv \sum_{L=1}^{\infty} [h_\mu(L) - h_\mu]$.
• $E$ is thus the total amount of randomness that is “explained away” by considering larger blocks of variables.

Excess Entropy: Other expressions and interpretations
Mutual information

• One can show that $E$ is equal to the mutual information between the “past” and the “future”:
  $E = I(\overleftarrow{S}; \overrightarrow{S}) \equiv \sum_{\{\overleftrightarrow{s}\}} \Pr(\overleftrightarrow{s}) \log_2 \left[ \frac{\Pr(\overleftrightarrow{s})}{\Pr(\overleftarrow{s}) \Pr(\overrightarrow{s})} \right]$.
• $E$ is thus the amount one half “remembers” about the other, the reduction in uncertainty about the future given knowledge of the past.
• Equivalently, $E$ is the “cost of amnesia”: how much more random the future appears if all historical information is suddenly lost.

Excess Entropy: Other expressions and interpretations
Geometric View

• $E$ is the $y$-intercept of the straight line to which $H(L)$ asymptotes.
• $E = \lim_{L \to \infty} [H(L) - h_\mu L]$.

  [Figure: $H(L)$ versus $L$, together with its asymptote $E + h_\mu L$; the asymptote meets the vertical axis at $E$.]

Excess Entropy Summary

• Is a structural property of the system; measures a feature complementary to entropy.
• Measures memory or spatial structure.
• Lower bound for statistical complexity, the minimum amount of information needed for a minimal stochastic model of the system.
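The sum that defines $E$ and the intercept form $\lim_{L \to \infty} [H(L) - h_\mu L]$ can be connected by a short telescoping argument. The sketch below assumes $H(0) = 0$ (no symbols observed, no uncertainty) and that the limit exists; the partial-sum index $M$ is introduced here for illustration only.

```latex
\begin{align*}
\sum_{L=1}^{M} \bigl[ h_\mu(L) - h_\mu \bigr]
  &= \sum_{L=1}^{M} \bigl[ H(L) - H(L-1) \bigr] - M h_\mu \\
  &= H(M) - H(0) - M h_\mu \\
  &= H(M) - h_\mu M , \\[4pt]
E &= \lim_{M \to \infty} \bigl[ H(M) - h_\mu M \bigr] .
\end{align*}
```

Read this way, the shaded area under the $h_\mu(L)$ curve and the $y$-intercept of the asymptote of $H(L)$ are the same number.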

Example I: Fair Coin

  [Figure: $H(L)$ versus $L$ for two processes: a fair coin and a biased coin with $p = 0.7$.]

• For the fair coin, $h_\mu = 1$.
• For the biased coin, $h_\mu \approx 0.8813$.
• For both coins, $E = 0$.
• Note that two systems with different entropy rates have the same excess entropy.

Example II: Periodic Sequence

  [Figure, two panels: $H(L)$ with its asymptote $E + h_\mu L$ versus $L$; $h_\mu(L)$ versus $L$.]

• Sequence: . . . 1010111011101110 . . .

Example II, continued

• Sequence: . . . 1010111011101110 . . .
• $h_\mu = 0$; the sequence is perfectly predictable.
• $E = \log_2 16 = 4$: four bits of phase information.
• For any period-$p$ sequence, $h_\mu = 0$ and $E = \log_2 p$.

For more than you probably ever wanted to know about periodic sequences, see Feldman and Crutchfield, Synchronizing to Periodicity: The Transient Information and Synchronization Time of Periodic Sequences. Advances in Complex Systems. 7 (3-4): 329-355, 2004.

Example III: Random, Random, XOR

  [Figure, two panels: $H(L)$ with its asymptote $E + h_\mu L$ versus $L$; $h_\mu(L)$ versus $L$.]

• Sequence: two random symbols, followed by the XOR of those symbols.
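The figures quoted for the coin and periodic examples can be checked with a few lines of code. This is a minimal sketch under stated assumptions: the helper names `binary_entropy` and `periodic_block_entropy` are hypothetical, the coin is treated as independent and identically distributed (so its entropy rate equals its single-symbol entropy), and the periodic sequence is treated as the 16-symbol pattern from Example II observed at a uniformly random phase.

```python
from collections import Counter
from math import log2

def binary_entropy(p):
    """Entropy in bits of a coin that lands heads with probability p (0 < p < 1)."""
    return -(p * log2(p) + (1 - p) * log2(1 - p))

def periodic_block_entropy(pattern, L):
    """Exact H(L) in bits for the stationary process that repeats `pattern`,
    with the starting phase chosen uniformly at random."""
    if L == 0:
        return 0.0
    p = len(pattern)
    blocks = Counter(
        tuple(pattern[(phase + k) % p] for k in range(L)) for phase in range(p)
    )
    return -sum((c / p) * log2(c / p) for c in blocks.values())

# Example I: for an i.i.d. coin, h_mu is the single-symbol entropy.
h_fair = binary_entropy(0.5)
h_biased = binary_entropy(0.7)

# Example II: the period-16 pattern shown on the slide.
pattern = [int(c) for c in "1010111011101110"]
H = [periodic_block_entropy(pattern, L) for L in range(0, 21)]
h_mu_est = H[20] - H[19]          # slope of H(L) at large L
E_est = H[20] - h_mu_est * 20     # intercept of the asymptote

print(f"fair coin:   h_mu = {h_fair:.4f} bits")
print(f"biased coin: h_mu = {h_biased:.4f} bits")
print(f"periodic:    h_mu = {h_mu_est:.4f} bits, E = {E_est:.4f} bits")
```

Because all 16 phases of the pattern give distinct blocks once $L$ is large enough, $H(L)$ saturates at $\log_2 16$ bits, which is where the four bits of phase information come from.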
